From Data Deluge to Decisions: Solving Precision Agriculture's Information Overload Problem

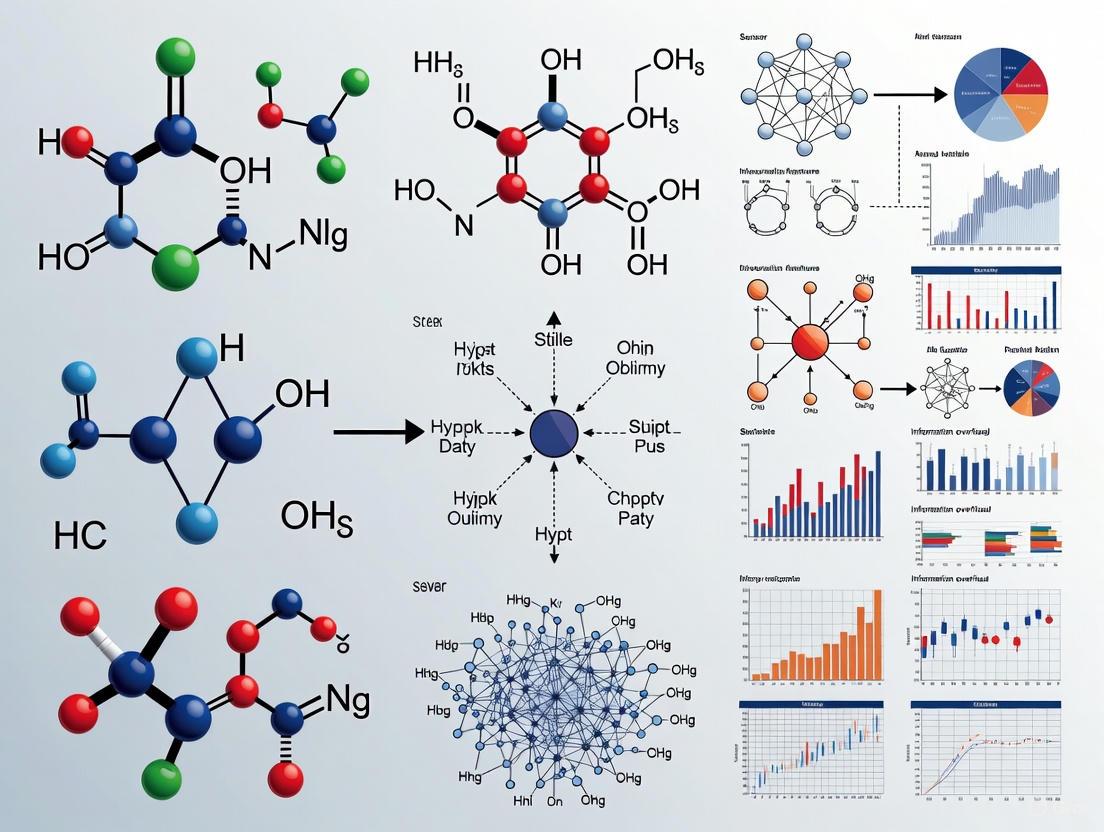

The proliferation of IoT sensors, drones, and satellite systems in precision agriculture is generating millions of data points daily, creating a critical challenge of information overload that can paralyze decision-making. This article provides a comprehensive framework for researchers and agricultural technology developers to navigate this complexity. It explores the foundational causes and scale of data overload, presents methodological advances in AI and data fusion for transforming raw data into actionable insights, offers strategies for optimizing data integration and management, and establishes validation frameworks for comparing solution efficacy. The synthesis of these areas provides a clear path toward building more interpretable, efficient, and trustworthy agricultural sensor systems that enhance, rather than hinder, farm management.


Understanding the Data Tsunami: The Scale and Impact of Agricultural Information Overload

Frequently Asked Questions (FAQs)

FAQ 1: What are the primary sources contributing to such high volumes of daily data on a modern research farm?

A modern precision agriculture research farm generates data from a dense network of interconnected sensors and systems [1] [2]. The primary sources include:

- IoT Sensor Networks: Wireless sensors deployed across fields continuously monitor parameters like soil moisture, temperature, nutrient levels (nitrogen, phosphorus, potassium), humidity, and solar radiation [3] [1] [4]. One study reported grid layouts requiring from several hundred to nearly a thousand sensor nodes for comprehensive coverage [4].

- Remote Sensing: Satellites and drones capture high-resolution, multispectral imagery (e.g., NDVI for crop health) over vast areas at regular intervals [5] [1]. This provides panoramic, field-level data on plant stress and canopy conditions.

- Automated Machinery: GPS-guided tractors, planters, and harvesters with integrated sensors generate real-time data on location, fuel consumption, yield, and application rates for seeds, fertilizer, and water [1] [2].

- Weather Stations: On-site stations provide hyper-local meteorological data [3].

This infrastructure leads to high-velocity, high-volume data streams that require robust management systems [2].

FAQ 2: Our research team is experiencing latency in our data pipeline, causing delays between data collection and the availability of analyzed results. What are the common bottlenecks?

Latency is a significant barrier to informing daily farm management decisions [5]. Common bottlenecks include:

- Data Transmission Delays: In remote or underdeveloped regions, limited rural broadband or mobile internet access can hinder the real-time transmission of data from field sensors to central cloud platforms [1].

- Centralized Cloud Processing: Sending all raw data to a centralized cloud for processing can introduce latency, especially when dealing with terabytes of information [2].

- Complex Data Processing: Machine learning models for yield prediction or disease detection require substantial computational power and time to process large, diverse datasets [1] [6].

Solution: Implementing edge computing is a key strategy. By processing data at the point of acquisition (on the device or a local gateway), you can reduce latency to within hours of acquisition and transmit only the most actionable insights to the cloud [5] [2].

FAQ 3: How can we effectively validate the accuracy of data from low-cost NPK and soil moisture sensors against laboratory-grade standards?

Validating field sensor data is crucial for reliable research. A systematic methodology is required.

- Protocol: A reviewed study on NPK sensor deployment recommends a comparative analysis where soil samples are collected from the exact locations of the sensor nodes. These samples are then analyzed using traditional laboratory methods. The sensor readings are directly compared to the lab results to calculate an error rate [4].

- Reported Accuracy: The aforementioned study reported an error rate of 8.47% for its NPK sensor nodes when compared to laboratory controls, which was considered a relatively satisfactory outcome for field deployment [4].

- Calibration: Cloud-based software platforms now exist that use IoT to remotely monitor and update sensor calibrations, helping to maintain optimal performance and accuracy over time [7].
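The sensor-versus-lab comparison described in the protocol can be sketched in a few lines. The source does not say how its error rate is computed, so this example assumes a mean absolute percentage error (MAPE) against the lab value. The function name and the sample readings are hypothetical and are not data from the cited study.

```python
from statistics import mean

def mean_abs_pct_error(sensor, lab):
    """Mean absolute percentage error of sensor readings vs. lab ground truth.

    Assumption: 'error rate' is interpreted as MAPE; the cited study may
    define it differently.
    """
    if len(sensor) != len(lab):
        raise ValueError("sensor and lab series must be the same length")
    if any(v == 0 for v in lab):
        raise ValueError("lab reference values must be non-zero")
    return 100.0 * mean(abs(s - l) / abs(l) for s, l in zip(sensor, lab))

# Hypothetical nitrogen readings (mg/kg) at four co-located sampling points.
sensor_n = [42.0, 55.0, 38.0, 60.0]
lab_n = [40.0, 50.0, 40.0, 60.0]
print(f"Sensor error rate: {mean_abs_pct_error(sensor_n, lab_n):.2f}%")
```

Computing the figure per node rather than per network makes it easier to decide which individual sensors need recalibration.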

FAQ 4: We are facing challenges with data interoperability. Our equipment and sensors from different manufacturers output data in proprietary formats. How can we integrate this for a unified analysis?

Data interoperability is a recognized challenge in agricultural technology [2]. Proprietary data formats from machinery and sensors can create silos and hinder analysis.

- Solution: The industry is moving towards API-driven integrations and open platforms [1]. Using open APIs (Application Programming Interfaces) allows different software systems and devices to communicate and share data seamlessly. For instance, agri-tech companies offer APIs for satellite, weather, and other data streams, enabling researchers to build integrated, custom solutions [1].

- Data Infrastructure: A robust data infrastructure must support integration from various sources (sensors, satellites, machinery) to enable unified analytics [8].

FAQ 5: What data visualization best practices are most critical for helping researchers quickly identify trends and anomalies in massive agricultural datasets?

Effective data visualization is key to making complex data understandable and actionable.

- Know Your Audience: Tailor visualizations to the needs and expertise of the research team. A technical team may require more detail than stakeholders focused on high-level outcomes [9].

- Choose the Right Chart: Use line charts for trends over time, bar charts for comparing categories, and scatter plots for showing correlations between two variables. Avoid pie charts when you have many small segments, as they can be difficult to interpret [9].

- Keep it Simple: Avoid clutter and unnecessary details. Use minimal, strategic color schemes with high contrast to highlight important data points and make the visualization accessible [9] [10].

- Make it Interactive: Incorporate tooltips, filters, and drill-down capabilities. This allows researchers to engage with the data, ask specific questions, and explore various angles in real-time [9].

Troubleshooting Guides

Problem: Data Integrity and Sensor Failure in Field-Deployed Wireless Sensor Networks (WSNs)

1. Identifying the Issue:

- Symptoms: Missing data streams, data values stuck at a constant level, readings that are physically impossible (e.g., soil moisture at 200%), or data that consistently deviates from calibrated norms.

- Diagnosis: Check the sensor network's dashboard for offline node alerts. Physically inspect suspected nodes for power supply issues (e.g., depleted solar charge, damaged cables), environmental damage (e.g., water ingress, insect nests), or physical obstruction.
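Several of the symptoms listed above can be screened for automatically before anyone walks the field. The following is a minimal sketch, assuming readings arrive as a list with `None` marking a missing sample. The function name, the plausible bounds, and the `stuck_run` length are illustrative assumptions, not values from the source.

```python
def diagnose(readings, lo, hi, stuck_run=5):
    """Return a sorted list of symptom labels for one sensor's readings.

    readings: list of floats, with None marking a missing sample.
    lo, hi: physically plausible bounds (assumed; set per sensor type).
    stuck_run: number of consecutive identical values treated as 'stuck'.
    Note: missing samples are dropped before the stuck check, so values on
    either side of a gap count as consecutive.
    """
    symptoms = set()
    if any(r is None for r in readings):
        symptoms.add("missing")
    present = [r for r in readings if r is not None]
    if any(r < lo or r > hi for r in present):
        symptoms.add("out_of_range")
    run = 1
    for prev, cur in zip(present, present[1:]):
        run = run + 1 if cur == prev else 1
        if run >= stuck_run:
            symptoms.add("stuck")
            break
    return sorted(symptoms)

# Hypothetical soil-moisture series (%) showing all three symptoms.
moisture_pct = [31.2, 31.0, None, 200.0, 30.5, 30.5, 30.5, 30.5, 30.5]
print(diagnose(moisture_pct, lo=0.0, hi=100.0))
```

A check like this only flags candidates; the physical inspection described under Diagnosis is still needed to find the cause.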

2. Experimental Validation Protocol: To systematically identify and quantify sensor drift or failure, follow this experimental protocol adapted from WSN research [4]:

- Step 1: Baseline Laboratory Calibration. Before field deployment, calibrate all sensors against standard solutions or controlled media and document baseline performance.

- Step 2: Strategic Field Placement. Deploy sensors in a layout (e.g., grid, tessellation) that includes strategic node redundancy, as informed by algorithms like the Redundant Node Deployment Algorithm (RNDA), to extend network lifespan and provide validation points [4].

- Step 3: Concurrent Soil Sampling. For soil nutrient (NPK) and moisture sensors, take concurrent physical soil samples from the immediate vicinity of the sensor nodes. This should be done at multiple time points during the crop cycle.

- Step 4: Laboratory Analysis. Analyze the soil samples in a lab using standard chemical analysis methods (e.g., for NPK) or gravimetric methods (for moisture) to establish ground truth values [4].

- Step 5: Data Comparison and Error Calculation. Compare the field sensor readings with the laboratory results. Calculate the error rate for each sensor. A study using this method reported an average NPK sensor error rate of 8.47% [4].

3. Resolution Steps:

- Recalibrate: Recalibrate sensors that show a consistent bias but are otherwise functional, using the lab data as a reference.

- Replace: Replace sensors with high error rates or those that have failed completely.

- Leverage Redundancy: Use data from redundant nodes to fill gaps and maintain data continuity.

Problem: Data Overload and Inefficient Analysis Leading to "Analysis Paralysis"

1. Identifying the Issue:

- Symptoms: Inability to process incoming data streams in a timely manner, lack of clear insights from the data, or difficulty in translating data into actionable decisions.

2. Resolution Strategy:

- Implement a Tiered Data Architecture: Use a combination of edge computing and cloud platforms. At the edge, pre-process data to perform initial filtering, compute summary statistics, and trigger immediate alerts (e.g., irrigation needed), reducing the volume of data sent to the cloud [5] [2]. In the cloud, use scalable storage (e.g., a data lake built on object storage such as AWS S3) for deep historical analysis and training complex machine learning models [2].

- Adopt AI-Driven Decision Support: Integrate machine learning models that can automatically identify patterns, predict outcomes like yield or pest outbreaks, and provide field-specific, actionable recommendations to researchers [1] [6].

- Focus on Key Performance Indicators (KPIs): Use software solutions that leverage cloud analytics and KPIs to help users monitor critical metrics and make data-driven decisions without being overwhelmed by raw data [7].
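The edge tier of such an architecture can be illustrated with a small reduction step: a window of raw samples goes in, and one compact summary with an optional alert comes out. This is a sketch under stated assumptions; `summarize_window`, the node identifier, and the `dry_threshold` value are hypothetical, and a real gateway would also handle empty windows and transmission.

```python
from statistics import mean

def summarize_window(node_id, samples, dry_threshold):
    """Reduce one window of raw soil-moisture samples to a compact summary.

    dry_threshold is a hypothetical volumetric-moisture level (%) below
    which an irrigation alert is raised. Assumes samples is non-empty.
    """
    summary = {
        "node": node_id,
        "n": len(samples),
        "min": min(samples),
        "max": max(samples),
        "mean": round(mean(samples), 2),
    }
    summary["alert"] = "irrigation_needed" if summary["mean"] < dry_threshold else None
    return summary

raw = [18.4, 18.1, 17.9, 17.6, 17.5, 17.2]  # hypothetical 10-minute samples
payload = summarize_window("node-07", raw, dry_threshold=18.0)
print(payload)
```

Only `payload` would be sent upstream, so six readings become one record; the raw window can be kept locally or discarded depending on the study's retention needs.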

The following table summarizes the data generation potential and key metrics from various sources used in precision agriculture research.

Table 1: Data Source Metrics in Precision Agriculture Research

| Data Source | Measured Parameters | Data Volume & Frequency Context | Reported Impact / Accuracy |
|---|---|---|---|
| IoT Sensor Networks [3] [1] [4] | Soil moisture, temperature, NPK nutrients, humidity, EC, pH, solar radiation. | Continuous, real-time data streams; layouts can require >900 nodes per field [4]. | NPK sensor error rate of ~8.47% vs. lab control [4]. |
| Satellite & Drone Imagery [5] [1] | NDVI, EVI, crop health, canopy cover, soil moisture. | Frequent, high-resolution images over large areas; enables field-level resolution at global scale [5]. | Increases yield prediction accuracy by up to 30% vs. traditional methods [1]. |
| AI & Machine Learning [1] [6] | Predictive models for yield, disease, pests; optimization of inputs. | Processes high-volume, diverse datasets from multiple sources. | Can improve crop yield by 15-20% and reduce overall investment by 25-30% [6]. |
| Automated & Connected Systems [1] [7] | Machinery performance, input application logs, supply chain traceability. | Generates operational data from every action and movement. | Improves operational efficiency by 20-25% [6]. |

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Precision Agriculture Sensor Research

| Item / Solution | Function in Research Context |
|---|---|
| Wireless Sensor Nodes (NPK, moisture, temp) [4] | The core data collection unit for in-situ, real-time monitoring of soil macronutrients and environmental conditions. |
| Calibration Standards & Solutions [7] [4] | Used for baseline calibration and periodic re-calibration of sensors to ensure data accuracy and validity against known references. |
| NIR Analyzers & Cloud Management Software [7] | Forage quality analysis (e.g., via AGRINIR); cloud software (e.g., NIR evolution) enables remote diagnostics and calibration management. |
| Edge Computing Gateway Device [5] [2] | A local device for pre-processing data at the acquisition point, reducing latency and bandwidth use by sending only insights to the cloud. |
| API Integration Tools [1] | Software tools to connect disparate systems and data streams (e.g., Farmonaut's Satellite & Weather API), enabling unified data aggregation. |
| Cloud-Based Data Analytics Platform [8] [7] | A platform (e.g., FIELD trace) for storing, integrating, and analyzing massive datasets, often featuring visualization dashboards and KPI tracking. |

Experimental Data Management Workflow

The diagram below outlines a robust experimental workflow for managing high-volume sensor data, from collection to actionable insight, incorporating troubleshooting checkpoints.

Technical Support Center

Troubleshooting Guides

FAQ: Data Integration and Management

Q1: Our research generates data from multiple sensor brands and formats, creating integration headaches. How can we create a unified dataset for analysis?

A: This is a common challenge arising from a lack of interoperability. The solution involves a multi-step process of data harmonization.

- Step 1: Audit Data Sources. Catalog all data sources (e.g., soil sensors, drones, satellite imagery, weather stations) and their output formats, protocols, and metadata schemas.

- Step 2: Establish a Standardized Vocabulary. Define and adopt common data standards and formats for your research group. Leverage existing agricultural data standards like those from the International Committee on Animal Recording (ICAR) where applicable [11].

- Step 3: Implement an Integration Platform. Utilize a centralized data platform or data lake that can ingest diverse data types. Platforms like FarmOS offer open-source models for integrating real-time sensor data [12].

- Step 4: Automate Data Pipelines. Create automated workflows (e.g., using scripts or platforms like Polly for biomedical data) to clean, standardize, and harmonize incoming data into an AI-ready format [13].
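Steps 2 and 4 can be made concrete with a small mapping layer that renames vendor-specific fields to a shared vocabulary and unifies units. The sketch below is illustrative only: the vendor names, field names, and target schema are hypothetical and do not correspond to any real manufacturer's format or to a published standard.

```python
# Hypothetical vendor payloads: field names and units differ per manufacturer.
FIELD_MAP = {
    "vendor_a": {"ts": "timestamp", "sm_pct": "soil_moisture_pct", "t_c": "soil_temp_c"},
    "vendor_b": {"time": "timestamp", "moisture": "soil_moisture_pct", "temp_f": "soil_temp_f"},
}

def harmonize(vendor, record):
    """Rename vendor-specific fields to a shared vocabulary and unify units."""
    mapping = FIELD_MAP[vendor]
    # Keep only fields that have an agreed name; unknown fields are dropped.
    out = {mapping[k]: v for k, v in record.items() if k in mapping}
    if "soil_temp_f" in out:  # convert Fahrenheit to Celsius
        out["soil_temp_c"] = round((out.pop("soil_temp_f") - 32) * 5 / 9, 2)
    out["source"] = vendor  # retain provenance for later QC
    return out

a = harmonize("vendor_a", {"ts": "2024-05-01T06:00:00Z", "sm_pct": 27.5, "t_c": 14.0})
b = harmonize("vendor_b", {"time": "2024-05-01T06:00:00Z", "moisture": 26.9, "temp_f": 57.2})
print(a)
print(b)
```

Keeping the mapping in data rather than in code means a new sensor brand can be added by extending `FIELD_MAP` after the audit in Step 1.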

Q2: We are overwhelmed by data volume and alerts from precision sensors. How can we distinguish critical information from background noise?

A: This issue, known as data overload, reduces the effectiveness of monitoring systems [14]. Implement a tiered alert system.

- Solution: Design a three-level alert framework within your data management platform:

  - Level 1 (Critical): Requires immediate action (e.g., impending animal birth, severe pest outbreak). Configure for high-priority notifications.

  - Level 2 (Important): Requires action within a defined period (e.g., soil moisture dropping below a threshold, early signs of disease).

  - Level 3 (Informational): For tracking and record-keeping only (e.g., average daily rumination, completed growth stage).

- Additional Configuration: Ensure alert thresholds are not fixed. They should be adjustable based on factors like season, herd condition, and specific research goals [14].
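A minimal sketch of the three-level framework with adjustable thresholds might look like the following. The threshold values and the use of season as the only adjustment factor are assumptions made for illustration; real thresholds would come from the research team.

```python
from enum import IntEnum

class Level(IntEnum):
    CRITICAL = 1
    IMPORTANT = 2
    INFORMATIONAL = 3

# Hypothetical soil-moisture thresholds (%), adjustable per season.
THRESHOLDS = {
    "summer": {"critical_below": 12.0, "important_below": 20.0},
    "winter": {"critical_below": 8.0, "important_below": 15.0},
}

def classify(moisture_pct, season):
    """Map one soil-moisture reading to an alert level for the given season."""
    t = THRESHOLDS[season]
    if moisture_pct < t["critical_below"]:
        return Level.CRITICAL
    if moisture_pct < t["important_below"]:
        return Level.IMPORTANT
    return Level.INFORMATIONAL

# The same reading lands in different tiers depending on the season.
print(classify(17.0, "summer").name)
print(classify(17.0, "winter").name)
```

Because the thresholds live in a lookup table rather than in the logic, they can be revised between seasons or experiments without touching the classifier.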

Q3: How can we ensure data sovereignty and security when consolidating information onto a unified platform?

A: Data sovereignty is a critical ethical and operational concern, ensuring researchers and their partners retain control over their data [12].

- Action Plan:

  - Select Platforms with Transparent Governance: Choose platforms that have clear, transparent data governance policies. These should explicitly state data ownership, usage rights, and security measures [15].

  - Implement Access Controls: Use role-based access controls to ensure only authorized personnel can view, edit, or share specific datasets.

  - Adopt Secure Data Transfer Protocols: For collaborative projects, use open-source protocols like FarmStack that enable secure and consented data transfers between parties, maintaining control at the source [12].

  - Verify Compliance: Ensure the platform provider complies with relevant security standards (e.g., ISO 27001) and data protection regulations [15].

FAQ: Experimental Reproducibility

Q4: Our experiments are difficult to reproduce due to inconsistent data collection methods across lab teams. What is the best practice?

A: The root cause is often incomplete metadata and non-standardized protocols. Adopting the FAIR Principles (Findable, Accessible, Interoperable, Reusable) is the recommended solution [13].

- Methodology:

  - Findable: Create detailed and persistent digital identifiers for each dataset.

  - Accessible: Store data in a repository with clear access protocols.

  - Interoperable: Use standardized formats and rich metadata to describe the experimental conditions, sensor types, calibration data, and collection protocols. This contextual information is essential for reproducibility [11] [13].

  - Reusable: Provide comprehensive documentation on the experimental design and data processing steps.

Q5: Sensor-derived traits for genetic studies are fragmented. How can we improve their usability in breeding programs?

A: Integrating novel sensor-based traits into genetic evaluations requires a structured roadmap [11].

- Protocol:

  - Trait Definition: Precisely define the novel trait derived from sensor data (e.g., "daily activity count," "thermal stress index").

  - Quality Control: Establish robust, automated quality control (QC) methodologies to filter out erroneous sensor readings.

  - Data Harmonization: Centralize data from different sensor brands and farms using standardized data-sharing agreements and infrastructure [11].

  - Genetic Analysis: Calculate heritability estimates for the novel traits to confirm they have a genetic basis and are suitable for selection.
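For reference, narrow-sense heritability is conventionally defined as the fraction of phenotypic variance explained by additive genetic variance. The notation below is standard quantitative-genetics notation rather than something taken from the source, and the decomposition assumes a simple model in which all non-additive and environmental effects are pooled into one residual term:

$$
h^2 = \frac{\sigma_A^2}{\sigma_P^2}, \qquad \sigma_P^2 = \sigma_A^2 + \sigma_E^2
$$

Here $\sigma_A^2$ is the additive genetic variance of the sensor-derived trait, $\sigma_P^2$ its total phenotypic variance, and $\sigma_E^2$ the residual variance. A value of $h^2$ near zero suggests the trait mostly reflects environment or sensor noise, which is one reason the quality-control step precedes the genetic analysis.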

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Components for a Unified Agricultural Data Platform

| Component | Function |
|---|---|
| Centralized Data Infrastructure (Data Lake/Warehouse) | A repository for storing raw and processed data from all sources (sensors, satellites, genomics). It breaks down silos and enables holistic analysis [13]. |
| Interoperability Standards (e.g., ADAPT, ISO 11783) | Common data standards and APIs that allow different machines and software platforms to communicate and exchange data seamlessly, preventing fragmentation [12]. |
| FAIR Principles Implementation Framework | A set of guidelines to make data Findable, Accessible, Interoperable, and Reusable, directly combating reproducibility issues [13]. |
| IoT & Cloud-Based Monitoring Systems | Networks of connected sensors and cloud platforms (e.g., AWS, Azure) that enable real-time data collection, transmission, and storage for timely intervention [15] [16]. |
| Data Sovereignty Protocol (e.g., FarmStack) | An open-source protocol that enables secure and consented data sharing, ensuring that data owners (researchers, farmers) control how their data is used [12]. |

Experimental Protocols and Workflows

Protocol 1: Integration of Multi-Source Sensor Data for Phenotyping

Objective: To create a unified, clean dataset from disparate sensors (e.g., soil moisture, drone-based NDVI, weather stations) for plant or animal phenotyping.

Materials: Data from various sensors, a centralized data platform (e.g., data lake), data processing software (e.g., Python/R scripts, Polly platform [13]), and standardized metadata templates.

Methodology:

- Data Collection: Collect raw data from all available sources according to your experimental design.

- Metadata Annotation: Simultaneously, complete a standardized metadata template for each dataset, detailing the sensor model, calibration date, geographic location, environmental conditions, and data units.

- Data Ingestion: Ingest both raw data and metadata into the centralized data platform.

- Harmonization & Cleaning: Run automated pipelines to:

  - Convert all data to standardized units and formats.

  - Flag and handle missing or erroneous values based on QC rules.

  - Align all data streams to a common timestamp.

- Output: Export the harmonized dataset, ready for statistical analysis or machine learning.
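The timestamp-alignment part of the harmonization step can be sketched as follows. This is one possible approach, assuming each stream is a list of `(ISO 8601 timestamp with UTC offset, value)` pairs and that averaging within fixed hourly buckets is acceptable for the analysis. The `align` function, the stream names, and the sample values are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import mean

def align(streams, bucket_s=3600):
    """Average each stream into fixed UTC buckets and join on bucket start.

    streams: {name: [(iso_timestamp_with_offset, value_or_None), ...]}
    Missing values (None) are skipped; a bucket with no valid value for a
    stream is reported as None so the gap stays visible downstream.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for name, rows in streams.items():
        for ts, value in rows:
            if value is None:
                continue
            epoch = datetime.fromisoformat(ts).astimezone(timezone.utc).timestamp()
            start = int(epoch // bucket_s) * bucket_s
            buckets[start][name].append(value)
    table = []
    for start in sorted(buckets):
        row = {"bucket_utc": datetime.fromtimestamp(start, timezone.utc).isoformat()}
        for name in streams:
            vals = buckets[start].get(name)
            row[name] = round(mean(vals), 3) if vals else None
        table.append(row)
    return table

# Two hypothetical streams logged in different time zones.
streams = {
    "soil_moisture_pct": [("2024-05-01T06:10:00+00:00", 27.0), ("2024-05-01T06:40:00+00:00", 26.0)],
    "air_temp_c": [("2024-05-01T08:05:00+02:00", 14.5), ("2024-05-01T09:15:00+02:00", None)],
}
for row in align(streams):
    print(row)
```

Converting everything to UTC before bucketing avoids silent misalignment when loggers are configured with different local offsets.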

The following workflow diagram illustrates the path from fragmented data to unified insights:

Protocol 2: Implementing a Tiered Alert System for Livestock Monitoring

Objective: To reduce data overload and prioritize interventions by filtering alerts from precision livestock farming sensors (e.g., smart collars, ear tags).

Materials: Livestock sensor system, a data management platform with alert configuration capabilities, defined animal health and welfare thresholds.

Methodology:

- Define Thresholds: In consultation with animal scientists, define physiological and behavioral thresholds (e.g., for rumination, heart rate, activity) for each of the three alert levels.

- System Configuration: Program the data platform to apply these thresholds to the incoming real-time sensor data [14] [15].

- Notification Setup: Configure delivery channels for each level (e.g., Level 1: Push notification & SMS; Level 2: Email; Level 3: In-app log).

- Pilot Testing & Calibration: Deploy the system on a small scale, monitor the alert frequency and accuracy, and refine the thresholds to minimize false positives.

- Full Deployment & Training: Roll out the system to the entire research operation and train personnel on appropriate responses to each alert level.

The logic behind a tiered alert system is shown below:
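A minimal sketch of the routing logic, assuming the channel assignments given as examples in the Notification Setup step. The alert dictionary layout and the `route` function are hypothetical, and the outbox list stands in for real push, SMS, and email senders.

```python
# Channel assignments follow the examples in the Notification Setup step.
CHANNELS = {
    1: ["push", "sms"],     # Level 1 (Critical)
    2: ["email"],           # Level 2 (Important)
    3: ["in_app_log"],      # Level 3 (Informational)
}

def route(alert, outbox):
    """Append one (channel, message) pair per channel configured for the level."""
    message = f"[L{alert['level']}] {alert['animal']}: {alert['event']}"
    for channel in CHANNELS[alert["level"]]:
        outbox.append((channel, message))
    return outbox

outbox = []
route({"level": 1, "animal": "cow-112", "event": "impending birth"}, outbox)
route({"level": 3, "animal": "cow-087", "event": "daily rumination logged"}, outbox)
for channel, message in outbox:
    print(channel, message)
```

Only the Level 1 event reaches a channel that interrupts staff; the informational event is recorded without a notification, which is the point of the tiering.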

Data Presentation

Table: Key Challenges and Open-Source Responses in Agricultural Data Management [12]

| Challenge Area | Description | Open-Source Response / Potential |
|---|---|---|
| Data Fragmentation | Agricultural data exists in silos across various platforms and formats, hindering comprehensive analysis. | Open-source data standards (e.g., ADAPT, ISO 11783) and APIs facilitate interoperability and seamless data exchange. |
| Data Sovereignty & Access | Farmers and researchers lack control over their data; smallholders often excluded from technology benefits. | Open-source protocols (e.g., FarmStack) and platforms prioritize user ownership and consented data sharing. |
| Cost of Technology | Proprietary software and hardware are often prohibitively expensive for many research institutions. | Open-source tools eliminate licensing fees, reducing financial barriers and enabling wider adoption. |
| Digital Literacy | Limited understanding among stakeholders to use digital technologies and share data effectively. | Open-source educational resources and community-led initiatives support capacity building and knowledge sharing. |

Troubleshooting Guides

FAQ: Addressing Data Overload in Precision Agriculture Sensor Systems

1. What are the core facets of data overload in precision agriculture research beyond simple volume?

Beyond the sheer volume of data, researchers must contend with Velocity, Variety, and Veracity. Velocity refers to the high speed at which data is generated; the average farm can produce over 500,000 data points daily, a figure expected to grow significantly [17]. Variety describes the extreme diversity of data types, from satellite imagery and soil sensor readings to weather forecasts and IoT device outputs [18]. Veracity concerns the reliability and quality of data, which can be compromised by inconsistent collection methods or inaccurate sensors [18]. Managing these three facets is crucial for transforming raw data into actionable insights.

2. We are experiencing "data overload" from numerous disconnected systems. How can we achieve a unified view?

This is a common problem described as having "every color of paint, but no canvas" [17]. The solution is to implement a centralized farm management platform that can aggregate data from multiple sources. You should:

- Select platforms with open APIs: Prioritize technologies from providers that offer open APIs (Application Programming Interfaces), which allow different systems to communicate and share data seamlessly [17].

- Use integrated dashboards: Platforms like Agworld, Granular, or specialized research dashboards can integrate data from yield monitors, soil sensors, and financial records into a single interface, making it easier to identify patterns [19].

- Standardize data formats: Adopt standardized monitoring frameworks and data entry templates to ensure consistency across different experiments and farms [20].

3. How can we handle the high Velocity of real-time sensor data without missing critical events?

To manage data velocity, implement a system of automated, real-time alerts.

- Set up monitoring triggers: Configure your system to generate automatic health alerts based on specific thresholds, such as a sudden drop in a vegetation index (e.g., NDVI) or moisture stress detection via satellite thermal bands [20].

- Use predictive modeling: Employ software that uses historical data and real-time inputs to simulate crop performance under different scenarios, helping you anticipate issues before they cause significant damage [19].
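A monitoring trigger of the kind described above can be sketched with the standard NDVI formula, (NIR - Red) / (NIR + Red), and a simple pass-to-pass comparison. The `max_drop` threshold and the reflectance values are hypothetical; an operational trigger would also need cloud masking and per-crop tuning.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance.

    Assumes nir + red is non-zero.
    """
    return (nir - red) / (nir + red)

def sudden_drops(series, max_drop=0.15):
    """Return indices where NDVI fell by more than max_drop since the prior pass.

    max_drop is a hypothetical threshold; tune it per crop and growth stage.
    """
    return [i for i in range(1, len(series)) if series[i - 1] - series[i] > max_drop]

# Hypothetical (NIR, red) reflectance pairs for one plot over four passes.
passes = [(0.50, 0.10), (0.52, 0.10), (0.40, 0.16), (0.41, 0.16)]
values = [round(ndvi(n, r), 3) for n, r in passes]
print(values, sudden_drops(values))
```

Comparing each pass with the previous one, rather than with a fixed floor, keeps the trigger meaningful across growth stages where absolute NDVI differs.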

4. What methodologies improve data Veracity (quality and reliability) from field sensors?

Ensuring data veracity requires proactive quality control and calibration.

- Establish a calibration protocol: Implement a regular schedule for calibrating all field sensors (e.g., soil moisture probes, weather stations) according to manufacturer specifications.

- Implement validation checks: Use scripts or platform features to run data validation checks that flag anomalous readings that fall outside expected physiological or environmental ranges for further investigation.

- Conduct ground-truthing: Periodically verify remote-sensing data and automated alerts with physical field inspections to confirm accuracy and maintain model reliability [20].

5. Our research team lacks advanced technical skills. How can we overcome the Variety of complex data streams?

Reducing the technical barrier is key. You should:

- Adopt intuitive platforms: Invest in visual-first dashboards that use color-coded maps and simple scorecards to present complex data in an easily understandable format [20].

- Provide focused training: Develop simple training modules for researchers and technicians that focus on interpreting key data outputs rather than the underlying technical complexities.

- Utilize scorecard systems: Employ systems that aggregate diverse data points into a single, simple health score for each research plot, enabling quick comparison and prioritization [20].
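One way a scorecard could aggregate diverse readings into a single plot-level score is a weighted sum of min-max scaled indicators. Everything specific in this sketch is an assumption: the indicator list, the ranges, the weights, and the simplification that a higher value is always healthier would all need agronomic review.

```python
# Hypothetical indicator ranges and weights; both would need agronomic input.
INDICATORS = {
    "ndvi":              {"lo": 0.2, "hi": 0.9, "weight": 0.5},
    "soil_moisture_pct": {"lo": 10.0, "hi": 35.0, "weight": 0.3},
    "canopy_cover_pct":  {"lo": 20.0, "hi": 95.0, "weight": 0.2},
}

def health_score(plot):
    """Weighted 0-100 score from min-max scaled indicators (clipped to range)."""
    score = 0.0
    for name, cfg in INDICATORS.items():
        scaled = (plot[name] - cfg["lo"]) / (cfg["hi"] - cfg["lo"])
        score += cfg["weight"] * min(1.0, max(0.0, scaled))
    return round(100 * score, 1)

plots = {
    "plot-1": {"ndvi": 0.9, "soil_moisture_pct": 35.0, "canopy_cover_pct": 95.0},
    "plot-2": {"ndvi": 0.55, "soil_moisture_pct": 10.0, "canopy_cover_pct": 57.5},
}
# Lowest score first, so the plot needing attention tops the list.
ranked = sorted(plots, key=lambda p: health_score(plots[p]))
print([(p, health_score(plots[p])) for p in ranked])
```

Sorting by score gives technicians a short priority list without requiring them to interpret each underlying data stream.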

Experimental Protocols

Protocol 1: Implementing a Unified Data Integration and Analysis Pipeline

Objective: To create a standardized methodology for aggregating disparate agricultural data streams (Variety) into a single, analyzable dataset to reduce information overload.

Materials:

- Centralized data platform (e.g., farm management software with open API support)

- Data sources (e.g., IoT soil moisture sensors, drone-based multispectral imagery, weather station data, yield monitor output)

- Ground-truthing kit (soil probes, portable spectrometers, etc.)

Methodology:

- System Auditing: Inventory all data-generating sensors and platforms within the research operation. Document data formats, output intervals (Velocity), and accessibility (e.g., via API, CSV export).

- Platform Configuration: Select and configure a central management platform. Establish connections using open APIs to pull data from each source into a unified database [17].

- Data Standardization: Apply uniform spatial and temporal scales. For example, align all data to a common grid resolution and time-stamp intervals to enable direct correlation.

- Dashboard Development: Create customized dashboards within the platform that visualize integrated data streams. Key views should include:

  - A spatial map overlay showing soil nutrient levels against yield data.

  - A time-series graph correlating daily rainfall (weather data) with soil moisture readings (IoT sensor data).

- Validation and Calibration: Conduct scheduled ground-truthing exercises. Physically verify automated system alerts (e.g., low NDVI) by visiting field sites and collecting manual measurements to ensure data Veracity [20].

Protocol 2: Quantifying the Impact of Data Velocity on Decision Timeliness

Objective: To measure how the speed of data delivery and processing affects the effectiveness of agricultural interventions.

Materials:

- Real-time monitoring system with alerting capabilities

- Two comparable research plots (Plot A, Plot B)

- Data logging system with timestamps

Methodology:

- Baseline Establishment: Monitor both plots for a baseline period using identical sensors (e.g., for pest detection, moisture stress).

- Intervention Workflow:

  - Plot A (Real-Time): Configure the system to send immediate SMS/email alerts to researchers upon detection of a predefined stress threshold (e.g., pest density). Researchers must act on the alert within a set time window (e.g., 4 hours).

  - Plot B (Delayed): Implement a 48-hour data processing and reporting delay for Plot B. Researchers can only access the data and act after this delay.

- Data Collection: For each stress event, record:

  - Time of stress detection by the sensor system.

  - Time of intervention by the research team.

  - Outcome metrics (e.g., crop damage percentage, yield impact, cost of intervention).

- Analysis: Compare the average time-to-intervention and the corresponding outcome metrics between Plot A and Plot B. This quantifies the operational cost of data latency (Velocity).
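The analysis step can be sketched as a per-plot summary of the logged events. The event log below is entirely hypothetical and exists only to show the calculation; it is not experimental data, and the timestamp format is an assumption.

```python
from datetime import datetime
from statistics import mean

def hours_between(detected, acted):
    """Elapsed hours between sensor detection and team intervention."""
    fmt = "%Y-%m-%d %H:%M"
    delta = datetime.strptime(acted, fmt) - datetime.strptime(detected, fmt)
    return delta.total_seconds() / 3600

# Hypothetical event log: (plot, detection time, intervention time, crop damage %).
events = [
    ("A", "2024-06-01 08:00", "2024-06-01 11:00", 2.0),
    ("A", "2024-06-09 14:00", "2024-06-09 16:00", 3.0),
    ("B", "2024-06-01 08:00", "2024-06-03 10:00", 9.0),
    ("B", "2024-06-09 14:00", "2024-06-11 15:00", 11.0),
]

def summarize(plot):
    """Mean time-to-intervention and mean damage for one plot."""
    rows = [e for e in events if e[0] == plot]
    return {
        "mean_hours_to_intervention": mean(hours_between(d, a) for _, d, a, _ in rows),
        "mean_damage_pct": mean(dmg for *_, dmg in rows),
    }

for plot in ("A", "B"):
    print(plot, summarize(plot))
```

With only two plots the comparison is descriptive; replicated plot pairs would be needed before attributing outcome differences to latency alone.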

Research Reagent Solutions

The following tools and platforms are essential for constructing a robust research infrastructure capable of handling multi-faceted data overload.

| Research Reagent | Function & Application |
|---|---|
| Open API Platforms | Allows different software and sensor systems to communicate and share data, breaking down proprietary data silos and addressing the Variety challenge [17]. |
| Centralized Farm Management Software (e.g., Agworld, Granular) | Integrates data from multiple sources (yield monitors, soil sensors, financial records) into a single dashboard, providing a unified view to combat information overload [19]. |
| IoT Sensor Networks | Provides high-Velocity real-time data on soil conditions (moisture, temperature, nutrients) and micro-climates, forming the primary data source for precision experiments [19]. |
| Remote Sensing & Satellite Imagery | Delivers high-Variety spatial data on crop health (via NDVI/NDRE), water stress, and biomass at scale, enabling research over large geographical areas [20]. |
| Data Analytics & AI Platforms | Uses machine learning to process extreme Volumes of data, identifying patterns and providing predictive insights or prescriptive recommendations for crop management [19]. |

Architecting Intelligence: AI, Fusion, and Platforms for Actionable Insights

The Role of AI and Machine Learning in Filtering, Prioritizing, and Interpreting Sensor Data

Frequently Asked Questions (FAQs)

FAQ 1: What are the most effective AI models for processing heterogeneous sensor data in agricultural research?

Convolutional Neural Networks (CNNs) are the most widely used and cost-effective approach for image-based data analysis, such as detecting crop diseases from drone or satellite imagery [21]. For sequential data from in-field sensors, recurrent neural networks (RNNs) or models incorporating Long Short-Term Memory (LSTM) are highly effective for identifying temporal patterns related to soil moisture and microclimate changes [22] [23]. Vision Transformers (ViTs) can exhibit superior accuracy for certain complex image analysis tasks but require significantly higher computational resources [21].

FAQ 2: How can we mitigate data overload from continuous sensor monitoring in large-scale field experiments?

Implement a centralized data aggregation platform that integrates and visualizes data from multiple sources (e.g., satellite, IoT sensors, weather stations) into a single dashboard with actionable insights, rather than presenting raw data streams [20]. Employ AI-driven alert systems that trigger notifications only when sensor readings deviate from predefined baselines or predictive models, shifting focus from constant monitoring to exception-based intervention [20] [23]. Utilize feature selection and dimensionality reduction techniques within your ML pipelines to identify and retain only the most informative data points, thus reducing the volume of data requiring deep analysis [21].

FAQ 3: Our models perform well in the lab but fail in the field. How can we improve robustness?

This is often due to a geographic or environmental bias in training data. To address this, ensure your training datasets incorporate information from a wide variety of geographic locations, soil types, and climatic conditions to improve model generalizability [21]. Continuously collect field data and employ techniques like transfer learning to fine-tune your pre-trained models with smaller, targeted datasets from your specific experimental conditions [22]. Prioritize data quality over quantity; a smaller, well-labeled, and meticulously curated dataset often yields a more robust model than a massive, noisy one [21].

FAQ 4: What methodologies ensure that AI interpretations are accessible to domain experts (e.g., agronomists) without a deep learning background?

Invest in intuitive, visual-first dashboards that present AI-driven insights through color-coded maps, simple health scores, and shareable summary reports, rather than complex statistical outputs [20]. Develop and use standardized monitoring frameworks (e.g., uniform crop health scoring systems) that translate complex ML outputs into agronomically meaningful metrics familiar to researchers and farmers [20]. Integrate model explainability (XAI) techniques into your platform to help the AI system provide reasons for its predictions, such as highlighting the specific image features that led to a disease diagnosis, thereby building trust and understanding [22].

Troubleshooting Guides

Problem: Inaccurate Crop Health Alerts from Satellite and Drone Imagery

Step 1: Verify Data Quality and Preprocessing

- Action: Check for and correct common image artifacts. For satellite data, confirm cloud cover metrics are low. For drone data, ensure consistent altitude and lighting conditions across flights. Apply necessary radiometric and atmospheric corrections to raw imagery.

- Rationale: AI model performance is dependent on input data quality. Uncorrected images can lead to false positives for stress or disease.

Step 2: Recalibrate Ground-Truthing Data

- Action: Conduct targeted field visits to physically verify the conditions in areas flagged by the AI. Compare the AI's health score (e.g., NDVI) with on-the-ground observations of plant vigor, color, and signs of disease or nutrient deficiency.

- Rationale: Models can drift over time. Discrepancies often arise from a mismatch between the model's training data and current field conditions. Your field observations provide the essential labeled data needed to retrain and fine-tune the model [21].

Step 3: Retrain the Model with Augmented Data

- Action: Use your new ground-truthed data to fine-tune the model. Employ data augmentation techniques (e.g., rotating, flipping, adjusting brightness of images) to artificially expand your training dataset and improve model resilience to varying conditions.

- Rationale: This process adapts a generic model to the specific nuances of your experimental fields, significantly improving alert accuracy [22].
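The augmentation techniques named in Step 3 (rotating, flipping, adjusting brightness) can be illustrated without any imaging library. The sketch below assumes a grayscale image tile stored as nested lists of 0-255 pixel values; the function names and the brightness factor of 1.2 are illustrative choices, and a real pipeline would more likely use the augmentation utilities of its deep learning framework.

```python
Image = list[list[int]]  # grayscale pixel rows, values 0-255

def flip_horizontal(img: Image) -> Image:
    """Mirror each row left to right."""
    return [row[::-1] for row in img]

def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale pixel values and clip to the valid 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]

def augment(img: Image) -> list[Image]:
    """Return the original tile plus three augmented variants."""
    return [img, flip_horizontal(img), rotate_90(img), adjust_brightness(img, 1.2)]

tile = [[10, 20],
        [30, 40]]
variants = augment(tile)
```

Each ground-truthed tile yields four training examples here; the label (for example, healthy or stressed) is unchanged by these transformations, which is what makes them safe to apply.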

Problem: Data Silos from Disparate Sensor Networks (Soil, Weather, Imagery)

Step 1: Audit and Standardize Data Formats

- Action: Document the output formats, communication protocols (e.g., LoRaWAN, NB-IoT, cellular), and sampling frequencies of all deployed sensors (soil moisture, pH, weather stations, etc.) [23].

- Rationale: Incompatible data structures are a primary cause of silos. This audit reveals the scope of the integration challenge.

Step 2: Implement a Centralized Data Ingestion Layer

- Action: Develop or adopt a farm management software platform that can act as a central hub. This platform should support APIs or other connectors to ingest data from your diverse sensor systems and satellite feeds into a unified database [20] [24].

- Rationale: A centralized system is a prerequisite for holistic data analysis and breaks down silos by creating a single source of truth.

Step 3: Synchronize Data Timestamps and Create a Unified View

- Action: Apply data engineering techniques to align all data streams on a common timestamp. This allows the platform to create a unified dashboard where, for example, a drop in soil moisture can be directly correlated with a thermal stress signal from satellite imagery and a change in weather data [22] [23].

- Rationale: Synchronized data enables powerful, multi-modal AI analysis, revealing causal relationships that are invisible when examining single data streams.
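One simple way to align streams on a common timestamp is a nearest-neighbour join with a tolerance. The sketch below assumes each stream is a time-sorted list of (timestamp, value) pairs; the sample readings, the 5-minute tolerance, and the helper names are hypothetical.

```python
from bisect import bisect_left
from datetime import datetime, timedelta

def nearest_value(series, target, tolerance):
    """Return the value in a time-sorted (timestamp, value) series closest
    to target, or None if nothing lies within tolerance."""
    times = [t for t, _ in series]
    i = bisect_left(times, target)
    candidates = series[max(0, i - 1): i + 1]  # neighbours on either side
    if not candidates:
        return None
    t, value = min(candidates, key=lambda tv: abs(tv[0] - target))
    return value if abs(t - target) <= tolerance else None

def ts(hhmm: str) -> datetime:
    """Helper for building sample timestamps on one (arbitrary) day."""
    return datetime.fromisoformat(f"2024-06-01T{hhmm}")

# Hypothetical streams: soil moisture every 15 minutes, weather at irregular times.
soil = [(ts("10:00"), 21.5), (ts("10:15"), 21.1), (ts("10:30"), 20.8)]
weather = [(ts("10:02"), 31.0), (ts("10:31"), 32.4)]

# Use the soil timestamps as the common time base.
unified = [
    {"time": t, "soil_moisture": m,
     "air_temp": nearest_value(weather, t, timedelta(minutes=5))}
    for t, m in soil
]
```

Rows where no weather reading falls within the tolerance carry `None` rather than a stale value, so downstream analysis can decide explicitly whether to interpolate or discard them.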

Table 1: Performance Metrics of Prevalent AI Models in Agricultural Sensor Data Interpretation

| AI Model/Technique | Primary Application Area | Key Performance Metric | Reported Efficacy / Adoption | Computational Cost |

|---|---|---|---|---|

| Convolutional Neural Networks (CNNs) [21] | Image-based disease detection, crop health monitoring | Accuracy, F1-Score | High accuracy; Most widely used & cost-effective [21] | Moderate |

| Vision Transformers (ViTs) [21] | Advanced image analysis for stress/pest detection | Accuracy | Superior accuracy to CNNs [21] | High |

| Predictive Analytics (e.g., LSTMs) [22] | Yield forecasting, pest/disease outbreak prediction | Forecast Precision, Mean Absolute Error | ~59% adoption in yield forecasting [22] | Moderate to High |

| Sensor Data Fusion & IoT Analytics [23] | Real-time livestock health & environmental monitoring | Early Detection Accuracy, System Uptime | Enables early illness detection; ~90% of users report improved herd progress [23] | Varies with sensor density |

Table 2: Key Agricultural Sensor Types and AI Interpretation Functions

| Sensor Type | Measured Parameters | AI's Primary Filtering/Prioritization Role | Common Data Output Format |

|---|---|---|---|

| Multispectral / Hyperspectral [24] | NDVI, NDRE, specific light wavelengths | Identifies patterns of stress/deficiency invisible to the human eye; prioritizes areas needing intervention. | GeoTIFF, raster data arrays |

| Soil Moisture & Nutrient Sensors [24] | Volumetric water content, NPK levels, temperature | Filters out minor fluctuations; triggers alerts only when thresholds are breached; guides variable rate irrigation. | Digital (e.g., JSON, CSV) via LoRaWAN/cellular [23] |

| Vital Sign Monitoring (Livestock) [23] | Body temperature, heart rate, activity levels | Establishes behavioral baselines; prioritizes animals showing abnormal patterns for early disease detection. | Digital time-series data |

| GPS & Location Trackers [23] | Animal movement, grazing patterns, equipment location | Creates geofences; alerts on unusual movement; optimizes logistics and pasture rotation. | GPS coordinates (NMEA) |

Experimental Protocol: AI-Assisted Sensor Fusion for Early Detection of Late Blight

- Objective: To develop a robust ML model for the early detection of tomato late blight by fusing data from soil sensors, weather stations, and drone-based multispectral imagery.

- Sensor Deployment:

- Deploy soil moisture and temperature sensors at a depth of 10cm in a grid pattern across the experimental tomato field.

- Install a local weather station to monitor air temperature, relative humidity, rainfall, and leaf wetness.

- Schedule weekly drone flights equipped with a multispectral camera to capture NDVI and thermal data.

- Data Collection & Preprocessing:

- Collect data from all sensors and the drone for a full growing season, logging soil and weather readings at 15-minute intervals and capturing drone imagery on the weekly flight schedule.

- Manually scout and label areas in the field for the presence/absence and severity of late blight on a weekly basis. This serves as the ground-truth data.

- Synchronize all data streams by timestamp and geolocation. Extract features from the data, such as "48-hour average humidity > 90%" or "NDVI decrease of >0.1 in one week."

- Model Training & Validation:

- Train a hybrid CNN-LSTM model. The CNN processes the multispectral imagery, while the LSTM models the temporal sequence of soil and weather data.

- Use 70% of the synchronized and labeled data for training. Reserve 30% for testing.

- The model's output is a probability score for late blight occurrence for each 1m x 1m grid cell in the field.

- Interpretation & Action:

- The model generates a risk map overlay on a field map. Areas with a probability score above 85% are flagged as high-risk and highlighted for immediate scouting and potential intervention.

- The model's accuracy is continuously validated against subsequent manual scouting reports.
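The two example features from the preprocessing step and the 85% flagging rule from the interpretation step can be expressed directly. This sketch assumes humidity is logged every 15 minutes (so 48 hours is 192 readings) and that the model's output is available as a 2D grid of probabilities; the function names are illustrative, and the hybrid CNN-LSTM model itself is not shown.

```python
from statistics import mean

READINGS_PER_48H = 48 * 4  # 15-minute logging interval

def humid_48h(humidity: list[float]) -> bool:
    """Feature: 48-hour average relative humidity above 90%."""
    window = humidity[-READINGS_PER_48H:]
    # Require a full 48-hour window before the feature can fire.
    return len(window) == READINGS_PER_48H and mean(window) > 90.0

def ndvi_drop(previous_week: float, current_week: float) -> bool:
    """Feature: NDVI decrease of more than 0.1 in one week."""
    return (previous_week - current_week) > 0.1

def high_risk_cells(prob_map: list[list[float]], threshold: float = 0.85):
    """Return (row, col) of grid cells whose blight probability exceeds the threshold."""
    return [
        (r, c)
        for r, row in enumerate(prob_map)
        for c, p in enumerate(row)
        if p > threshold
    ]

# Hypothetical model output for a 2 x 2 block of 1m x 1m cells.
risk_map = [[0.20, 0.90],
            [0.86, 0.85]]
flagged = high_risk_cells(risk_map)
```

The flagged cell coordinates can then be converted to field coordinates and handed to the scouting team.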

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for an AI-Driven Agricultural Sensor Project

| Item / Solution | Function in the Experimental Context | Specification / Notes |

|---|---|---|

| Multispectral Sensor System (e.g., on Drone/UAV) | Captures non-visible light wavelengths (e.g., Red-Edge, NIR) to calculate vegetation indices like NDVI and NDRE for early stress detection [24]. | Critical for creating labeled image datasets to train computer vision models for crop health monitoring. |

| In-Field IoT Sensor Network | Measures real-time, location-specific parameters like soil moisture, temperature, electrical conductivity (nutrient level), and ambient microclimate [23] [24]. | Provides the temporal data stream for AI models to learn environmental correlations with plant health. LPWAN (e.g., LoRaWAN) is ideal for remote areas [23]. |

| Centralized Farm Management Software Platform | Acts as the data aggregation and visualization hub, integrating satellite, drone, and IoT sensor data for a unified view and AI-driven analytics [20] [22]. | Look for platforms with API access for custom data export and model integration. Essential for breaking down data silos. |

| GPS/GNSS Receiver | Provides precise geolocation for all data points, enabling the creation of accurate field maps and ensuring data from different sources can be aligned spatially [24]. | Centimeter-level accuracy is required for variable rate application and precise correlation of sensor readings. |

| Labeled Field Scouting Dataset | The "ground truth" data collected by human experts (e.g., agronomists) that is used to train, validate, and fine-tune AI models [21]. | Quality is paramount. Must be meticulously collected, standardized, and synchronized with sensor data timestamps. |

System Architecture and Workflow Diagrams

Troubleshooting Common Data Fusion Issues

FAQ: How can I deal with inconsistent or conflicting data from different sources (e.g., satellite vs. drone)?

| Issue | Possible Cause | Solution |

|---|---|---|

| Data Misalignment | Differing spatial resolutions, coordinate systems, or collection times. | Ensure proper georeferencing and data registration as a first processing step [25]. |

| Conflicting Readings | Sensors operate on different scales (proximal, aerial, orbital) with varying accuracies [25]. | Fuse data at the decision level, where each data type is processed separately and results are combined later [25]. |

| Inconsistent Biomass Estimates | Different sensors (e.g., satellite vs. drone) measure different proxies (e.g., NDVI) with varying sensitivities. | Apply data fusion techniques that explore the synergies and complementarities of the different data types to resolve ambiguities [25]. |

| Data Gaps in Satellite Imagery | Cloud cover can block optical satellite sensors for days or weeks [26]. | Deploy IoT field sensors in strategic locations and use their data to extrapolate and "fill in" the missing spatial information [26]. |

FAQ: My system is generating hundreds of alerts, making it hard to identify urgent issues. How can I manage this overload?

Information noise is a common challenge that can leave a researcher hostage to notifications [14]. Implement a multi-level alert system to prioritize critical issues and reduce information overload [14].

- Define Alert Tiers: Categorize alerts into levels such as "Critical," "Important," and "Informational" based on the severity and urgency of the situation [14].

- Use Dynamic Thresholds: Avoid fixed alert thresholds. Allow them to be adjusted based on factors like crop growth stage, season, or specific experimental goals [14].

- Leverage Intelligent Filters: Configure the system to trigger actionable alerts for specific events, such as an SMS when soil moisture drops below a critical level, while suppressing less important notifications [27].
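The three practices above can be combined in a small classifier. This is a sketch only: the growth stages, the soil moisture thresholds, and the rule that only "Critical" alerts go out by SMS are all assumptions chosen for illustration, not recommended agronomic values.

```python
# Hypothetical soil-moisture thresholds (% volumetric water content) per growth stage.
THRESHOLDS = {
    "seedling":  {"critical": 18.0, "important": 22.0},
    "flowering": {"critical": 15.0, "important": 20.0},
}

def classify(moisture_pct: float, growth_stage: str) -> str:
    """Assign an alert tier using thresholds that depend on growth stage."""
    limits = THRESHOLDS[growth_stage]
    if moisture_pct < limits["critical"]:
        return "Critical"
    if moisture_pct < limits["important"]:
        return "Important"
    return "Informational"

def should_send_sms(tier: str) -> bool:
    """Intelligent filter: interrupt the researcher only for critical events."""
    return tier == "Critical"

# The same reading produces different tiers at different growth stages.
readings = [(16.0, "seedling"), (16.0, "flowering"), (25.0, "flowering")]
tiers = [classify(m, stage) for m, stage in readings]
sms_count = sum(should_send_sms(t) for t in tiers)
```

Lower tiers would typically be written to a dashboard or a daily digest instead of being pushed to a phone.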

Experimental Protocols for Robust Data Fusion

This section provides a detailed methodology for a key experiment in agricultural data fusion: creating a continuous, high-resolution crop health map by fusing satellite and IoT data.

Protocol: Fusing Satellite and IoT Data to Overcome Cloud Cover

Objective: To generate a daily, cloud-free map of a key biophysical indicator (e.g., Leaf Area Index) by fusing intermittent satellite imagery with continuous IoT sensor data [26].

Materials and Reagents:

| Item | Function/Specification |

|---|---|

| Optical Satellite Data | Source: Sentinel-2 imagery. Provides high-resolution spatial data (e.g., 10m) for indicators like NDVI and CIgreen every 5 days, cloud-permitting [26]. |

| IoT Field Sensors | Manufacturer: e.g., Bosch. Stationary sensors placed in the field to collect real-time, location-specific data on environmental conditions [26]. |

| Data Processing Platform | A system capable of handling geospatial data, running fusion algorithms (e.g., machine learning models), and spatializing point data from IoT sensors to the field level [26]. |

| Calibration Tools | Tools and methods to ensure IoT sensor data is accurately calibrated against ground truth measurements for reliable extrapolation. |

Methodology:

- Pre-Study and Sensor Deployment: Conduct a preliminary analysis of field heterogeneity using historical data. Place IoT sensors at strategic stationary locations within the field that represent its variability [26].

- Data Collection:

- Continuously collect real-time data from the IoT sensors.

- Download satellite imagery on every available clear-sky date.

- Data Fusion and Modeling:

- On dates with clear satellite imagery, establish a statistical or machine learning model that correlates the ground-level IoT sensor readings with the spatial data from the satellite.

- On days obscured by clouds, use this calibrated model to extrapolate the real-time IoT data points and generate a daily, high-resolution map of the entire field [26].

- Validation: Validate the accuracy of the fused daily maps by comparing them to the next available clear-sky satellite image or through manual ground-truthing.
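The fusion step can be illustrated with the simplest possible model. The sketch below uses ordinary least squares on a single sensor and the satellite pixel at its location, as a deliberately simple stand-in for the statistical or machine learning model the protocol calls for. The paired values are invented, and a real implementation would fit many sensors against many pixels and spatialize the result across the field.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical clear-sky dates: IoT sensor reading paired with the
# satellite-derived index at the sensor's pixel.
sensor_clear = [1.0, 2.0, 3.0, 4.0]
satellite_clear = [0.3, 0.5, 0.7, 0.9]

slope, intercept = fit_line(sensor_clear, satellite_clear)

def estimate_index(sensor_reading: float) -> float:
    """Estimate the satellite index on a cloudy day from the sensor alone."""
    return slope * sensor_reading + intercept

cloudy_day_estimate = estimate_index(2.5)
```

For the validation step, the estimate for the last cloudy day can be compared against the next clear-sky image to track how quickly the calibration drifts.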

Workflow Diagram: Satellite-IoT Fusion Process

The following diagram illustrates the logical workflow for the satellite-IoT fusion protocol.

The Researcher's Toolkit: Essential Technologies for Data Fusion

The following table details key technologies and their functions for building a data fusion research platform in precision agriculture.

| Technology / Reagent | Category | Primary Function in Research |

|---|---|---|

| TensorFlow / PyTorch | AI Framework | Provides major libraries for developing and training machine learning and deep learning models for tasks like image analysis and time-series forecasting [28]. |

| OpenCV | Computer Vision | A key library for processing visual data from drones and other imagery, used for tasks like real-time crop disease detection [28]. |

| Convolutional Neural Networks (CNNs) | Algorithm | Particularly effective for analyzing image data from drones and satellites to identify crop stress, pests, or nutrient deficiencies [28]. |

| Recurrent Neural Networks (RNNs/LSTM) | Algorithm | Ideal for time-series forecasting, such as predicting crop yields based on historical sensor and weather data [28]. |

| Kalman Filter | Algorithm | A mathematical algorithm that estimates the state of a dynamic system (e.g., a drone's position) from noisy sensor measurements, crucial for navigation and data integration [29]. |

| LoRaWAN / NB-IoT | Connectivity | Low-power, wide-area network protocols used to connect IoT sensors across expansive rural areas where cellular coverage may be weak [28] [27]. |

| MQTT | Connectivity | A lightweight messaging protocol ideal for transmitting data from field sensors and equipment to a central platform with low bandwidth usage [28]. |

| PostgreSQL (PostGIS) | Data Handling | A spatial database extension that enables advanced storage and querying of geospatial data [28]. |

| QGIS / ArcGIS | GIS Tool | Software for advanced geospatial analysis, mapping fields, and understanding soil variability and crop performance [28]. |

System Architecture and Data Flow Diagram

A robust technical architecture is vital for managing data from source to insight. The following diagram outlines the core components and data flow of an integrated agricultural monitoring system.

Leveraging Open APIs and Interoperability Standards for Seamless Data Flow

Technical Support & Troubleshooting FAQs

General Integration Issues

Q: What are the first steps to integrate my existing sensor data with an open-source agriculture platform via its API?

A: Begin by verifying that your sensors and data logger can output data in a standardized format. Many open-source platforms support common interoperability standards like ISO 11783 (for machinery data) and ADAPT for agronomic data, which can be bridged via open APIs [12]. Check your platform's API documentation for specific authentication methods (often API keys or OAuth) and supported data formats like JSON or XML. Initial integration typically involves these steps:

- Use an IoT data logger (e.g., Hawk Pro) that supports flexible sensor integrations and can translate proprietary sensor data into a compatible format [27].

- Configure the API endpoint URL, authentication credentials, and data transmission intervals within your device or data management software.

- Start with a small-scale test, sending data for a single sensor to the platform to verify the connection and data structure before full-scale deployment.

Q: API calls to my precision agriculture platform are failing with authentication errors. What should I check?

A: Authentication errors are often related to incorrect credentials or token configuration. Please verify the following:

- API Key Validity: Ensure the API key is correctly copied and has not expired. Regenerate a new key if necessary.

- Permissions: Confirm that the API key or user account associated with the key has the necessary permissions for the requested actions (e.g., data read, data write).

- Request Headers: Check that your API request includes the authentication key in the correct header field, as specified in the platform's documentation (e.g., Authorization: Bearer <your_api_key>).

- IP Whitelisting: Some services require your server's IP address to be whitelisted. Confirm if this is a requirement for your API [30].
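As a quick check of the header format, the request can be constructed and inspected before anything is sent. The sketch below uses Python's standard library; the endpoint URL, payload fields, and key are placeholders, and the Bearer scheme is only one common convention, so the platform's own documentation takes precedence.

```python
import json
from urllib.request import Request

API_URL = "https://platform.example.com/api/v1/readings"  # hypothetical endpoint

def build_request(api_key: str, payload: dict) -> Request:
    """Build (but do not send) an authenticated JSON POST request."""
    body = json.dumps(payload).encode("utf-8")
    return Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )

req = build_request("YOUR_API_KEY", {"sensor_id": "soil-01", "moisture_pct": 23.4})

# Inspect what would be sent; a stray space or missing "Bearer" prefix
# is a frequent cause of authentication errors.
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))
```

Passing `req` to `urllib.request.urlopen` would send it; an HTTP 401 or 403 response at that point usually points back to the key validity and permission checks listed above.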

Q: My sensor data is arriving at the platform, but the values are incorrect or unreadable. How can I fix this data mismatch?

A: This is typically a data formatting or unit discrepancy. To resolve this:

- Review the Data Schema: Consult the API documentation for the exact data schema, including required field names, data types (e.g., string, float, integer), and units of measurement (e.g., Celsius vs. Fahrenheit, volumetric water content %).

- Check Data Translation: If you are using a gateway or data logger, verify its configuration is correctly translating the raw sensor output (e.g., from SDI-12, RS-485, or 4-20mA protocols) into the JSON or XML structure expected by the API [27].

- Validate Data: Use the platform's data validation tools or a staging environment to test and debug the data payload before sending it to the production system.
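A local pre-flight check can catch most schema and unit problems before data reaches the platform. The schema below is hypothetical (field names, types, and the 0-100% range are assumptions for illustration); the real one comes from the API documentation.

```python
# Hypothetical schema: field name -> required Python type.
SCHEMA = {
    "sensor_id": str,
    "timestamp": str,
    "soil_temp_c": float,
    "vwc_pct": float,  # volumetric water content, percent
}

def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a reading to the unit the (assumed) schema expects."""
    return (temp_f - 32) * 5 / 9

def validate(payload: dict) -> list[str]:
    """Return a list of human-readable problems; empty means the payload passes."""
    errors = []
    for field, expected in SCHEMA.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected):
            actual = type(payload[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    vwc = payload.get("vwc_pct")
    if isinstance(vwc, float) and not 0.0 <= vwc <= 100.0:
        errors.append("vwc_pct: outside 0-100 %")
    return errors

good = {"sensor_id": "soil-01", "timestamp": "2024-06-01T10:00:00",
        "soil_temp_c": fahrenheit_to_celsius(68.0), "vwc_pct": 23.4}
bad = {"sensor_id": "soil-01", "soil_temp_c": 20.0, "vwc_pct": "23.4"}

good_errors = validate(good)
bad_errors = validate(bad)
```

Here `bad` fails twice: the timestamp is missing and the moisture value was sent as a string, which is a typical symptom of a misconfigured gateway translation.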

Data Management and Analysis

Q: How can I manage data flow to avoid being overwhelmed by high-frequency sensor data from my fields?

A: To prevent data overload, implement a strategic data management protocol:

- Set Appropriate Logging Intervals: Configure your IoT data loggers to transmit data at intervals that are meaningful for your research. For soil moisture, this might be every 30 minutes, instead of every minute [27].

- Leverage On-Device Processing: Use gateways or loggers that can perform initial data filtering, aggregation (e.g., sending average values), or trigger-based reporting to reduce the volume of data transmitted [27].

- Utilize Platform Features: Employ the data platform's tools to create management zones. This allows you to analyze and act upon aggregated data for specific areas rather than individual data points from thousands of sensors, simplifying decision-making [31].
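The on-device aggregation idea can be sketched as a function that collapses high-frequency readings into fixed windows and transmits only the averages. The 30-minute window follows the soil moisture example above; the readings are invented, and the simple flooring logic assumes the window length divides evenly into 60 minutes.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

def aggregate(readings, window_minutes: int = 30) -> dict:
    """Average (ISO timestamp, value) readings into fixed windows.

    Assumes window_minutes divides 60 (e.g., 10, 15, 30).
    """
    buckets = defaultdict(list)
    for stamp, value in readings:
        t = datetime.fromisoformat(stamp)
        # Floor the timestamp to the start of its window.
        start = t.replace(minute=t.minute - t.minute % window_minutes,
                          second=0, microsecond=0)
        buckets[start].append(value)
    return {start.isoformat(): mean(values)
            for start, values in sorted(buckets.items())}

# Hypothetical raw soil-moisture readings (% VWC).
raw = [
    ("2024-06-01T10:00:00", 20.0),
    ("2024-06-01T10:10:00", 22.0),
    ("2024-06-01T10:29:00", 24.0),
    ("2024-06-01T10:30:00", 30.0),
]
summary = aggregate(raw)
```

Four raw readings become two transmitted values; at one reading per minute the reduction would be thirtyfold.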

Q: Can I use open APIs to combine my sensor data with satellite imagery for a more complete analysis?

A: Yes, this is a primary strength of interoperable platforms. Modern precision agriculture platforms are designed for this. You can use their APIs to:

- Pull satellite-derived vegetation indices (like NDVI) for your field boundaries.

- Correlate this satellite data with your in-ground sensor readings (e.g., soil moisture, temperature) from your API data stream.

- Generate unified insights, such as identifying if poor crop health in a satellite image is correlated with low soil moisture in that specific zone [32] [31].

Connectivity and Hardware

Q: I am conducting research in a remote area with poor cellular connectivity. What are my options for reliable data flow?

A: For off-grid or remote locations, consider these connectivity options, which can be configured in your data loggers:

- Low-Power Wide-Area Networks (LPWAN): Protocols like LoRaWAN offer very long-range and low-power connectivity, though they may require setting up a private gateway [27].

- Satellite Connectivity: For areas with no cellular coverage, satellite communicators can be integrated to transmit data.

- Store-and-Forward: Ensure your data logger has sufficient memory to store data locally when a connection is lost and automatically transmit the backlog once connectivity is restored [27].
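The store-and-forward behaviour can be sketched as a bounded queue that is drained whenever the link is available. This is an illustration of the pattern, not the firmware of any particular logger; the class name, the capacity, and the `send` callback are assumptions.

```python
from collections import deque

class StoreAndForward:
    """Buffer readings locally and transmit the backlog when the link returns."""

    def __init__(self, send, capacity: int = 1000):
        self._send = send  # callable returning True on successful transmission
        self._buffer = deque(maxlen=capacity)  # oldest readings dropped when full

    def submit(self, reading) -> None:
        self._buffer.append(reading)
        self.flush()

    def flush(self) -> None:
        # Send in arrival order; stop at the first failure and keep the rest.
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()

    @property
    def pending(self) -> int:
        return len(self._buffer)

# Simulated link that starts offline.
delivered = []
link = {"online": False}

def fake_send(reading) -> bool:
    if link["online"]:
        delivered.append(reading)
        return True
    return False

logger = StoreAndForward(fake_send)
logger.submit(1)
logger.submit(2)
pending_while_offline = logger.pending

link["online"] = True
logger.submit(3)  # backlog and new reading are transmitted in order
```

The bounded `deque` reflects the finite memory of a real device: once the buffer is full, the oldest readings are lost first, so the capacity should be sized for the longest expected outage.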

Q: The battery in my remote field sensor node is depleting faster than expected. What could be the cause?

A: Rapid battery drain is often due to transmission frequency or power settings.

- Transmission Interval: The most significant factor. In the device configuration, reduce how often the device transmits data and re-registers with the cellular network.

- Power-Saving Mode: Enable deep sleep or power-saving modes on the data logger between transmission cycles.

- Solar Panel: For long-term deployments, integrate a small solar panel to continuously charge the battery and power the system [27].

Experimental Protocols for Data Integration

Protocol 1: Establishing a Multi-Sensor IoT Network for Soil Monitoring

Objective: To deploy a resilient IoT sensor network for collecting real-time soil data and streaming it to an analysis platform via an open API.

Materials:

- Soil moisture sensors (SDI-12 or RS-485 recommended)

- Hawk Pro IoT Data Logger or equivalent [27]

- Air temperature and soil temperature sensors

- Power source (e.g., solar panel kit with battery)

- SIM card with cellular data plan (e.g., LTE-M)

Methodology:

- Sensor Selection & Calibration: Select sensors compatible with your soil type and the data logger's I/O architecture (e.g., SDI-12, RS-485). Calibrate sensors according to manufacturer instructions [27].

- Field Deployment: Install sensors at representative locations and multiple depths (e.g., 15cm and 45cm) within the root zone. Bury the sensors to ensure good soil contact.

- Hardware Configuration: Connect sensors to the Hawk Pro data logger. Configure the logger to recognize each sensor and its measurement parameters.

- Connectivity & Power Setup: Install the SIM card and connect the solar panel. Securely mount the enclosure in a location that maximizes sun exposure and signal strength.

- API Integration:

- In the platform's web interface, generate an API key with write permissions.

- In the Hawk Pro's configuration (via Device Manager), input the API endpoint URL, authentication key, and set the desired data transmission interval.

- Define the JSON structure that maps sensor data to the API's expected fields.

- Validation: Allow the system to run for 24-48 hours. Verify in the platform that data is being received correctly and that the values fall within expected ranges.

Protocol 2: Implementing Trigger-Based Automation for Irrigation Control

Objective: To create a closed-loop system where soil sensor data automatically triggers irrigation responses via API calls.

Materials:

- Functional IoT soil moisture sensor network (from Protocol 1)

- Internet-connected irrigation controller that accepts API commands or a relay that can be triggered via API.

- Access to a workflow automation tool (e.g., IFTTT, Zapier, or a custom script on a server).

Methodology:

- Define Thresholds: Establish soil moisture set points. For example, trigger irrigation when moisture in the topsoil drops below 15% and stop when it reaches 25% [27].

- Configure Webhooks/Alerts: In your data platform, set up a webhook or alert that sends an HTTP POST request to your automation tool's endpoint when the threshold is breached.

- Build the Automation Workflow:

- Trigger: The webhook from the data platform.

- Action: An API call to the irrigation controller to start or stop a specific zone.

- Safety Testing: Implement a failsafe, such as a maximum run time or a manual override. Test the system extensively under supervision to ensure it responds correctly to various conditions.
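The control logic behind this protocol is a hysteresis loop with a failsafe. The sketch below uses the 15% and 25% set points from the example above; the 60-minute maximum run time, the class and method names, and the fault lockout are assumptions. The returned command would be translated into the actual API call to the irrigation controller, which is not shown.

```python
class IrrigationController:
    """Hysteresis control: start below one set point, stop at a higher one."""

    def __init__(self, start_below=15.0, stop_at=25.0, max_run_minutes=60):
        self.start_below = start_below
        self.stop_at = stop_at
        self.max_run_minutes = max_run_minutes  # failsafe (assumed value)
        self.running = False
        self.run_minutes = 0
        self.fault = False  # set when the failsafe trips; needs manual reset

    def update(self, moisture_pct: float, elapsed_minutes: int):
        """Process one sensor reading; return 'start', 'stop', or None."""
        if self.running:
            self.run_minutes += elapsed_minutes
            if moisture_pct >= self.stop_at:
                self.running = False
                return "stop"
            if self.run_minutes >= self.max_run_minutes:
                # Moisture never recovered: possible sensor or valve fault.
                self.running = False
                self.fault = True
                return "stop"
            return None
        if moisture_pct < self.start_below and not self.fault:
            self.running = True
            self.run_minutes = 0
            return "start"
        return None

    def reset(self) -> None:
        """Manual override to clear a failsafe lockout."""
        self.fault = False

# Normal cycle with readings every 15 minutes.
controller = IrrigationController()
commands = [controller.update(m, 15) for m in (14.0, 20.0, 25.0, 20.0)]
```

Using two set points instead of one prevents the valve from rapidly switching on and off when moisture hovers near a single threshold, and the lockout keeps a failed sensor from restarting irrigation indefinitely.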

Data Presentation

Table 1: Quantitative Impact of IoT and Open Data in Agriculture

| Metric | Baseline / Problem | Outcome with IoT & Open Data | Data Source / Context |

|---|---|---|---|

| Water Use Efficiency | Up to 60% of water wasted due to runoff and overwatering [27]. | Significant reduction in water consumption via precision irrigation [27]. | IoT soil moisture sensor networks [27]. |

| Data Update Frequency | Manual collection (days/weeks) or outdated public forecasts [27]. | Satellite imagery updates every 5-7 days; sensor data in real-time [27] [31]. | Precision agriculture platforms (e.g., GeoPard) [31]. |

| Adoption & Collaboration | Data silos and proprietary systems hinder collaboration [12]. | GODAN initiative with a network of partners promoting open data since 2013 [12]. | Global Open Data for Agriculture and Nutrition (GODAN) [12]. |

Research Reagent Solutions

Table 2: Essential "Reagents" for Agricultural Data Interoperability Experiments

| Item | Function in the "Experiment" |

|---|---|

| IoT Data Logger (e.g., Hawk Pro) | The core "catalyst": it interfaces with physical sensors, translates proprietary data into standard formats, and manages data transmission via cellular networks [27]. |

| Open APIs (Application Programming Interfaces) | The "reaction vessel" where integration occurs. Allows different software systems (sensors, platforms) to communicate and exchange data seamlessly [31] [12]. |

| Interoperability Standards (e.g., ADAPT, ISO 11783) | The "standardized buffer solution," providing common data models and formats to ensure data from disparate sources can be understood and used cohesively [12]. |

| Open-Source Platform (e.g., FarmOS) | The "base solution," providing a transparent and customizable environment for managing, visualizing, and analyzing agricultural data without proprietary restrictions [12]. |

System Architecture Diagrams

Open API Data Flow in Agriculture

Data Overload Mitigation Strategy

Implementing Edge Computing and Cloud Platforms for Scalable Data Handling

Technical Support Center: FAQs & Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q1: What are the primary technical benefits of using Edge Computing for precision agriculture sensor systems?

Edge Computing provides three core technical benefits that directly address data overload in agricultural research:

- Low-Latency Response: Enables millisecond-level decision-making for time-critical tasks such as real-time adjustment of seeding equipment or plant phenotypic feature extraction by processing data directly at the source, eliminating cloud transmission delays [33].

- Bandwidth Optimization: Significantly reduces the volume of data sent to the cloud through local preprocessing, feature extraction, and data compression. This is crucial for managing data from bandwidth-intensive sources like UAV high-resolution imagery [33].

- Data Sovereignty & Robustness: Keeps sensitive or raw sensor data localized, enhancing security and ensuring continuous system operation even in remote areas with limited or intermittent internet connectivity [33] [34].

Q2: How does a "Boundless Automation" vision help in managing data overload?

A Boundless Automation vision, as described by Emerson, advocates for a seamlessly integrated data infrastructure that breaks down data silos [35]. It enables:

- Contextualized Data at Source: Modern intelligent sensors (e.g., wireless vibration monitors) don't just provide raw data; they automatically analyze it to deliver actionable information like specific fault alerts (imbalance, impacting), which drastically reduces the data overhead and expertise needed for interpretation [35].

- Democratization of Data: By presenting information from multiple devices in a single, intuitive dashboard, it prevents researchers from having to sift through disparate applications and data streams, saving time and reducing cognitive load [35].

Q3: What is the strategic difference between Edge Computing and Cloud Computing in a scalable data architecture?

Edge and Cloud Computing serve complementary roles in a scalable architecture, as outlined in the table below.

| Feature | Edge Computing | Cloud Computing |
|---|---|---|
| Primary Role | Real-time control, low-latency processing, data filtering [33] [34] | Large-scale data storage, long-term analysis, model training [36] |
| Latency | Low to ultra-low [34] | Higher, due to data transmission |
| Data Volume Handled | Processes and filters high-frequency raw data, sending only relevant events/insights [37] | Stores and processes massive, aggregated datasets from multiple edge nodes [36] |
| Connectivity Dependence | Can operate with limited or no connectivity [33] | Requires reliable internet connection |
| Best for | Autonomous machinery control, real-time pest detection, immediate anomaly alerts [38] [33] | Big data analytics, trend forecasting, global system monitoring, and collaborative research platforms [36] |

Q4: What are the foundational principles for designing a scalable cloud infrastructure?

Designing a scalable cloud infrastructure involves key architectural patterns [39]:

- Microservices & Loose Coupling: Architecting your application as a collection of independent, fine-grained services (microservices) allows you to scale individual components based on demand, rather than the entire monolithic application.

- Horizontal Scaling (Scale-Out): Adding more instances of a resource (e.g., servers, database nodes) to distribute the workload, often managed automatically with load balancers and autoscalers.

- Stateless Applications: Designing applications so that client requests are independent and do not rely on stored session data on the server. This makes it easy to distribute requests across any available instance.

- Infrastructure as Code (IaC): Automating the provisioning and management of your cloud infrastructure using code (e.g., with Terraform). This ensures environments are consistent, reproducible, and can be scaled rapidly without manual intervention [39].
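To illustrate why statelessness makes horizontal scaling straightforward, here is a minimal sketch in which every request carries all the data it needs, so a simple round-robin dispatcher can send it to any instance. The instance names, the request shape, and the `handle` and `dispatch` functions are hypothetical. A production deployment would use a managed load balancer rather than this in-process stand-in.

```python
from itertools import cycle


def handle(request):
    """Stateless handler: everything it needs is in the request itself."""
    values = request["values"]
    return {"plot": request["plot"], "mean": sum(values) / len(values)}


# Hypothetical pool of identical instances, visited in round-robin order.
instances = cycle(["instance-1", "instance-2", "instance-3"])


def dispatch(request):
    """Pick the next instance and process the request there."""
    return next(instances), handle(request)


requests = [
    {"plot": "P1", "values": [10, 20]},
    {"plot": "P2", "values": [30]},
    {"plot": "P3", "values": [5, 5]},
    {"plot": "P4", "values": [1, 2, 3]},
]
results = [dispatch(r) for r in requests]
for name, response in results:
    print(name, response)
```

Because no instance holds session data, adding a fourth instance to the pool would require no change to the handler.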

Troubleshooting Guides

Issue 1: System Performance Degradation Due to Data Overload

Symptoms:

Diagnosis & Resolution:

- Implement Edge Data Filtering: Shift from collecting all data to an event-driven or threshold-based strategy. Configure edge devices to process data locally and transmit only exceptional events (e.g., a temperature sensor sending data only when a predefined safe range is exceeded) [37].

- Adopt a Use-Case Driven Data Strategy: Before deployment, define specific use cases. For example: "Alert the researcher when soil moisture in Sector B drops below 15%." This forces the collection of only relevant data points, preventing "database bloat" [40].

- Assign Value to Parameters: For every data point collected, ask how it improves safety or efficiency, or what specific business insight it provides. If a parameter lacks a clear value proposition, do not upload it to the cloud [40].
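The threshold-based strategy described above can be sketched in a few lines. The `Reading` type, the sensor IDs, and the 5 to 35 degree safe range are assumptions chosen for illustration. Real limits depend on the crop and the sensor.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    value: float


def out_of_range(reading, low, high):
    """True when a reading falls outside the predefined safe range."""
    return reading.value < low or reading.value > high


def filter_events(readings, low, high):
    """Keep only exceptional readings; in-range readings stay on the device."""
    return [r for r in readings if out_of_range(r, low, high)]


# Hypothetical temperature readings in degrees C.
readings = [
    Reading("t1", 18.0),
    Reading("t1", 36.5),
    Reading("t2", 4.0),
    Reading("t2", 22.0),
]
events = filter_events(readings, low=5.0, high=35.0)
for event in events:
    print(f"transmit {event.sensor_id}: {event.value}")
```

In this example only two of the four readings would be transmitted.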

Issue 2: Connectivity and Latency Challenges in Remote Field Environments

Symptoms:

- Delayed or failed control commands to autonomous agricultural machinery.

- Inability to perform real-time analytics (e.g., crop health monitoring from a drone feed).

- Data synchronization failures between field devices and the central cloud repository.

Diagnosis & Resolution:

- Verify Edge Node Autonomy: Ensure that edge nodes (e.g., on machinery or local gateways) are equipped with pre-deployed lightweight AI models capable of performing core decision-making without a cloud connection. This maintains operation during network outages [33].

- Review Edge-Cloud Workload Distribution: Offload all time-critical processing tasks to the edge. The cloud should be used for non-real-time, computationally intensive tasks like long-term performance degradation prediction and large-scale historical data analysis [33].

- Explore Hybrid Connectivity Solutions: In areas with poor terrestrial networks, investigate hybrid solutions that combine local wireless networks (e.g., LoRaWAN) with satellite backup for critical data transmission [38].
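One way to keep an edge node useful during outages is to decide locally and buffer outgoing messages until the link returns. The sketch below assumes a simple threshold rule standing in for a lightweight model, and a caller-supplied `send` function that returns `True` on success. The `EdgeNode` class, its method names, and the buffer size are hypothetical.

```python
from collections import deque


class EdgeNode:
    def __init__(self, max_buffer=1000):
        # Bounded buffer: once full, the oldest messages are dropped first.
        self.pending = deque(maxlen=max_buffer)

    def decide(self, moisture_pct, threshold_pct=15.0):
        """Local rule standing in for a lightweight model; no cloud call."""
        return "irrigate" if moisture_pct < threshold_pct else "hold"

    def record(self, message):
        """Queue a message for later upload."""
        self.pending.append(message)

    def flush(self, send, online):
        """Send buffered messages in order; keep the rest if a send fails."""
        sent = 0
        while online and self.pending:
            if not send(self.pending[0]):
                break
            self.pending.popleft()
            sent += 1
        return sent


node = EdgeNode()
action = node.decide(12.0)          # decision is made without connectivity
node.record({"sector": "B", "action": action})

sent_offline = node.flush(send=lambda m: True, online=False)

uploaded = []
sent_online = node.flush(send=lambda m: uploaded.append(m) or True, online=True)
print(action, sent_offline, sent_online, uploaded)
```

A message is removed from the buffer only after `send` reports success, so a failed upload is retried on the next flush.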

Experimental Protocols & Methodologies

Protocol 1: Implementing a Smart Irrigation System with Edge-Based Control

This protocol outlines the steps to deploy a sensor system that optimizes water usage by processing data at the edge.

- Objective: To reduce water usage by triggering irrigation only when and where it is needed, based on real-time soil condition analysis at the edge.

- Research Reagent Solutions & Essential Materials:

| Item | Function |
|---|---|
| AMS Wireless Vibration Monitor | An example of an intelligent wireless sensor that provides contextualized machinery health data, demonstrating the principle of moving beyond raw data [35]. |
| Edge Computing Node/Gateway | A local device (e.g., a ruggedized server) with processing capabilities to run the irrigation control algorithm [33]. |
| Soil Moisture & Nutrient Sensors | Deployed in the field to collect raw data on soil conditions [38] [33]. |
| Multispectral Imaging Sensor (UAV-mounted) | Captures high-resolution images of crop canopy for health assessment [38]. |
| Lightweight AI Model | A pre-trained, efficient model for analyzing sensor data and making irrigation decisions locally [33]. |

- Methodology:

  - Sensor Deployment: Install a network of soil moisture sensors at various depths and locations across the field.

  - Algorithm Deployment: Load a lightweight decision-making algorithm onto the edge gateway. This algorithm is calibrated for the specific crop and soil type.

  - Local Processing & Control:

    - Soil moisture sensors continuously send raw data to the edge gateway.

    - The gateway processes this data in real-time, comparing it to predefined optimal moisture thresholds.

    - If the soil moisture falls below the threshold, the gateway sends an immediate command to the automated irrigation system in the corresponding sector, without relaying the raw sensor data to the cloud.

  - Cloud Synchronization: The edge gateway periodically sends only a summary report (e.g., "Sector A irrigated for 5 minutes on 2025-11-25") to the cloud for long-term storage and trend analysis.

The workflow for this protocol is as follows:
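As a minimal sketch of the local processing and cloud synchronization steps, the code below compares each reading to a per-sector threshold, issues irrigation commands locally, and produces only summary lines for the cloud. The sector names, threshold values, and the fixed five-minute irrigation duration are assumptions for illustration, not calibrated settings.

```python
from collections import defaultdict

# Hypothetical per-sector moisture thresholds (percent).
THRESHOLDS = {"A": 20.0, "B": 15.0}


def process_readings(readings, thresholds, minutes_per_trigger=5):
    """Decide irrigation locally; return (commands, cloud summaries)."""
    minutes = defaultdict(int)
    commands = []
    for sector, moisture in readings:
        if moisture < thresholds[sector]:
            # Command goes straight to the irrigation system in that sector.
            commands.append((sector, "irrigate", minutes_per_trigger))
            minutes[sector] += minutes_per_trigger
    # Only aggregated totals leave the gateway, never the raw readings.
    summaries = [
        f"Sector {sector} irrigated for {total} minutes"
        for sector, total in sorted(minutes.items())
    ]
    return commands, summaries


readings = [("A", 25.0), ("B", 12.0), ("A", 18.5), ("B", 14.0)]
commands, summaries = process_readings(readings, THRESHOLDS)
print(commands)
print(summaries)
```

A real gateway would also attach timestamps to the summaries and run on a schedule, which this sketch omits.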

Protocol 2: Establishing a Scalable Cloud Architecture for Agricultural Data

This protocol describes how to set up a resilient and scalable cloud platform to handle data ingested from multiple edge nodes.

- Objective: To create a cloud backend that can elastically scale to accommodate data from numerous field deployments, enabling large-scale analytics and collaboration.

- Methodology:

  - Adopt Microservices Architecture: Decompose the cloud application into small, independent services (e.g., a data ingestion service, a query service, a visualization service). This allows each service to be scaled independently based on load [39].

  - Implement Infrastructure as Code (IaC): Use tools like Terraform or Google Cloud Deployment Manager to define the entire cloud infrastructure (networking, databases, compute instances) in code. This allows for version control, easy replication of environments for different research groups, and automated, error-free scaling [39].

  - Configure Autoscaling and Load Balancing:

    - For compute resources (e.g., virtual machines, Kubernetes clusters), configure autoscaling policies based on metrics like CPU utilization or request rate [39].

    - Place a global load balancer in front of the services to distribute incoming traffic evenly across healthy backend instances, preventing any single resource from being overwhelmed [39].

  - Utilize Scalable Database Services: Choose managed cloud database services designed for massive scale, such as Google BigQuery or Spanner. These offer built-in replication, fault tolerance, and consistent performance as data volumes grow [39].
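To make the autoscaling step concrete, the sketch below applies a proportional rule: the desired instance count scales with the ratio of the observed metric to its target, clamped to configured bounds. This mirrors the rule used by the Kubernetes Horizontal Pod Autoscaler, but the function name, the default bounds, and the example figures are assumptions. Managed autoscalers add stabilization windows and tolerances not shown here.

```python
import math


def desired_replicas(current, metric, target, min_replicas=1, max_replicas=10):
    """Proportional scaling rule: ceil(current * metric / target), clamped."""
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * metric / target)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical CPU utilization figures (percent) against a 60% target.
scale_out = desired_replicas(current=4, metric=90, target=60)
scale_in = desired_replicas(current=4, metric=30, target=60)
capped = desired_replicas(current=4, metric=300, target=60)
print(scale_out, scale_in, capped)
```

Scaling on request rate works the same way, with requests per instance as the metric.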

The logical relationship of this cloud architecture is shown below:

Precision agriculture research generates vast amounts of data from various sensor systems, including satellite imagery, IoT soil sensors, weather stations, and drone-based surveillance. This data deluge presents a significant challenge for researchers and scientists, who must integrate, interpret, and act upon fragmented information streams to optimize agricultural experiments, crop development, and sustainable farming practices. The core problem lies in managing disparate data sources that lead to operational inefficiencies, lack of real-time insights, and difficulty in scaling research protocols across diverse agricultural environments [41] [20].

Unified dashboards and AI-driven advisory systems have emerged as transformative solutions to these challenges, providing centralized platforms that consolidate operational visibility and enable predictive analytics. These systems address critical research bottlenecks by offering:

- Centralized Data Integration: Combining multi-source agricultural data into single-pane visibility [41]

- Real-Time Monitoring: Enabling immediate response to crop stress, pest damage, or environmental changes [20]

- Predictive Analytics: Leveraging historical and real-time data for yield forecasting and risk assessment [20]

- Automated Workflows: Streamlining experimental protocols and data collection processes [41]

This case study examines successful implementations of these technologies, providing researchers with practical frameworks for addressing data overload in agricultural sensor systems research.

Real-World Success Stories

Large-Scale Agricultural Monitoring Platform

Challenge: A major agricultural research institution faced difficulties monitoring hundreds of experimental plots across fragmented geographical locations. Physical site visits were time-consuming, expensive, and failed to provide timely data for intervention decisions [20].

Solution: Implementation of a unified agricultural dashboard featuring:

- Satellite-based remote sensing with NDVI and NDRE vegetation indices

- Real-time health alerts for stress detection

- Centralized farm-level dashboards with performance scoring

- Automated boundary detection for experimental plots [20]
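For reference, both vegetation indices named above are normalized differences of band reflectances: NDVI uses the near-infrared and red bands, and NDRE uses the near-infrared and red-edge bands. The sketch below computes them for a single pixel. The reflectance values are made up for illustration, and returning 0.0 for a zero denominator is a choice made in this example.

```python
def normalized_difference(a, b):
    """(a - b) / (a + b), with 0.0 returned when both bands are zero."""
    total = a + b
    if total == 0:
        return 0.0
    return (a - b) / total


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def ndre(nir, red_edge):
    """Normalized Difference Red Edge index."""
    return normalized_difference(nir, red_edge)


# Hypothetical surface reflectances for one pixel.
pixel_ndvi = ndvi(nir=0.5, red=0.1)
pixel_ndre = ndre(nir=0.5, red_edge=0.3)
print(round(pixel_ndvi, 3), round(pixel_ndre, 3))
```

Both indices fall between -1 and 1 for non-negative reflectances, so stress alerts can be expressed as thresholds on them.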

Results:

- Reduced field visit requirements by 65% through targeted interventions

- Achieved near-real-time detection of pest damage and drought stress

- Enabled standardized monitoring protocols across all research sites

- Enabled scalable, simultaneous monitoring of numerous small-plot experiments [20]

AI-Optimized Research Station Operations

Challenge: A direct-to-consumer agricultural research group (Laverne) experienced slow experimental cycles (4-6 days per protocol) and inconsistent data quality from third-party monitoring services [41].

Solution: Deployment of an end-to-end experimental management system featuring:

- Unified dashboard for real-time experiment tracking

- Automated sensor data collection and integration

- AI-driven resource allocation for field operations

- Integrated warehouse and transport management [41]

Results:

- Reduced protocol-to-data-collection time from 4-6 days to 2-3 hours for critical metrics

- Achieved 100% data accuracy post-implementation

- Significant cost savings by switching from third-party to integrated monitoring

- Increased research throughput by 45% through workflow optimization [41]

Multi-Site Agricultural Research Management

Challenge: A MENA-based agricultural research organization struggled with managing multiple experimental stations using different protocols, data formats, and monitoring systems, creating inconsistencies in research outcomes [41].

Solution: Implementation of an AI-powered unified dashboard providing:

- Centralized inventory management of research materials

- Smart resource allocation across experimental sites

- Real-time data synchronization across all research stations

- Predictive analytics for experimental outcome forecasting [41]

Results:

- Automated tracking of materials across multiple research facilities

- 30% reduction in resource shortages through predictive forecasting

- Seamless integration of point-of-sale systems for experimental yield tracking

- Standardized data collection protocols across all research sites [41]

Quantitative Performance Analysis

Table 1: Performance Metrics of Unified Dashboard Implementations

| Implementation Case | Operational Efficiency Gain | Data Accuracy Improvement | Cost Reduction | Time Savings |
|---|---|---|---|---|
| Large-Scale Agricultural Monitoring | 65% reduction in physical site visits | Real-time detection capability | Not specified | Near-real-time intervention |
| AI-Optimized Research Station | 45% throughput increase | 100% post-implementation | Significant savings (millions) | 2-3 hours (from 4-6 days) |
| Multi-Site Research Management | 30% reduction in resource shortages | Standardized cross-site data | Not specified | Streamlined protocols |

Table 2: AI Troubleshooting Efficacy in Agricultural Research Systems

| Problem Category | Frequency (%) | Resolution Rate | Average Resolution Time |
|---|---|---|---|
| Input & Context Issues | 60% | 92% | 2 minutes |
| Model Configuration | 25% | 88% | 5 minutes |
| Output Processing | 10% | 85% | 3 minutes |