Comparative Performance of Plant Biosystems Design Approaches: From Theoretical Frameworks to Biomedical Applications

Abstract

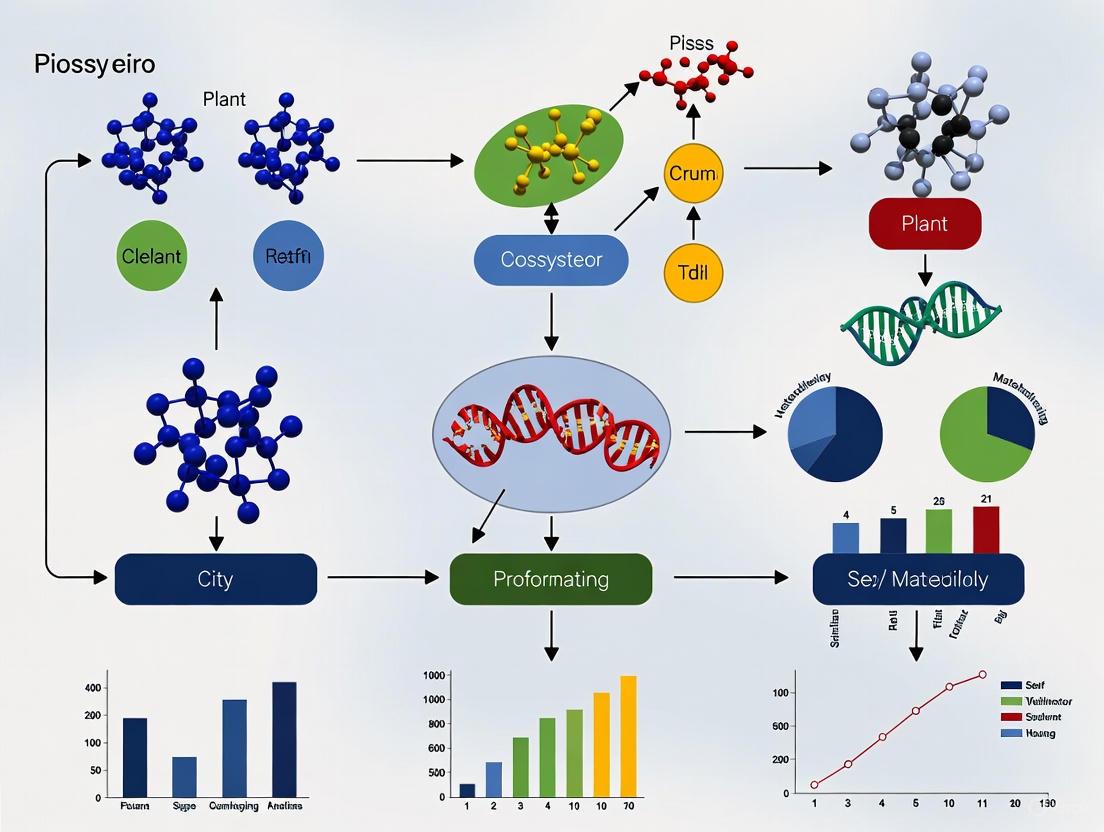

This article provides a comprehensive analysis of the performance and efficacy of various plant biosystems design approaches, addressing the critical need for advanced plant engineering strategies. We examine foundational theories including graph theory applications and mechanistic modeling for predicting plant system behavior. The review covers cutting-edge methodologies such as genome editing, genetic circuit engineering, and de novo genome synthesis, alongside practical optimization strategies for troubleshooting common experimental challenges. Through comparative validation of different biosystems design frameworks, we assess their relative strengths in precision, efficiency, and scalability. This work synthesizes critical insights for researchers and drug development professionals seeking to leverage plant biosystems design for biomedical innovation, sustainable therapeutic production, and clinical applications.

Theoretical Foundations and Emerging Paradigms in Plant Biosystems Design

In the evolving field of plant biosystems design, graph theory has emerged as a fundamental mathematical framework for unraveling the complexity of biological systems. Graph theory provides powerful computational approaches to represent and analyze complex networks of molecular interactions, enabling researchers to move beyond single-gene studies to a systems-level understanding. A plant biosystem can be formally defined as a dynamic network of genes and multiple intermediate molecular phenotypes—such as proteins and metabolites—distributed across four dimensions: three spatial dimensions of cellular and tissue structure, and one temporal dimension encompassing developmental stages and circadian rhythms [1].

The application of graph theory to plant gene-metabolite networks allows researchers to address fundamental challenges in plant metabolic engineering. Unlike prokaryotic systems, plant metabolism features extraordinary complexity with highly branched pathways, extensive compartmentalization within organelles, and sophisticated regulatory circuits [2]. This complexity has traditionally impeded engineering efforts, as manipulating single enzymes often yields disappointing results due to distributed flux control across multiple pathway steps [2]. Graph-theoretic approaches provide a mathematical foundation to overcome these limitations by offering a holistic framework for modeling, predicting, and redesigning plant metabolic systems.

Theoretical Foundations and Network Components

Graph Theory Principles for Biological Networks

In graph theory applied to biological systems, networks are formally defined as graphs \( G = (V, E) \), where \( V \) represents a set of vertices (nodes) and \( E \) represents a set of edges (connections) [3]. In plant gene-metabolite networks, nodes typically represent biological entities including genes, transcripts, proteins, and metabolites, while edges represent the functional relationships between them, such as regulatory interactions, biochemical conversions, or physical associations [1] [3]. The mathematical rigor of this representation enables the application of sophisticated analytical tools to biological problems.

Biological networks can be categorized into several types based on their structural properties. Undirected graphs represent symmetric relationships, such as protein-protein interactions, while directed graphs capture asymmetric relationships like regulatory interactions where a transcription factor regulates a target gene [3]. Weighted graphs incorporate quantitative measures of interaction strength or reliability, which is particularly valuable for integrating multi-omics data [3]. For plant biosystems design, these formal representations enable researchers to move from qualitative descriptions to quantitative, predictive models of cellular behavior.
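As a minimal sketch of these representations, the Python snippet below stores a small directed, weighted network as an adjacency dictionary and derives its undirected counterpart. The node names (TF1, GENE_A, and so on) and the edge weights are hypothetical placeholders, not data from the cited studies.

```python
# Toy directed, weighted gene-metabolite graph G = (V, E).
# All node names and weights are hypothetical placeholders.
from collections import defaultdict

edges = [
    # (source, target, weight); weight is an assumed interaction confidence in [0, 1]
    ("TF1", "GENE_A", 0.9),        # regulatory: transcription factor -> target gene
    ("GENE_A", "ENZYME_A", 1.0),   # gene -> protein product
    ("ENZYME_A", "METAB_X", 0.7),  # enzyme -> metabolite it produces
    ("METAB_X", "TF1", 0.4),       # metabolite feeds back on the regulator
]

def build_directed(edge_list):
    """Adjacency map for a directed weighted graph: source -> {target: weight}."""
    adj = defaultdict(dict)
    for src, dst, w in edge_list:
        adj[src][dst] = w
    return dict(adj)

def to_undirected(edge_list):
    """Symmetrize the edges, as one would for protein-protein interactions."""
    adj = defaultdict(dict)
    for src, dst, w in edge_list:
        adj[src][dst] = w
        adj[dst][src] = w
    return dict(adj)

directed = build_directed(edges)
undirected = to_undirected(edges)
vertices = sorted({n for s, t, _ in edges for n in (s, t)})

# Out-degree (directed view) versus degree (undirected view) for one node
tf1_out_degree = len(directed["TF1"])
tf1_degree = len(undirected["TF1"])
```

In the directed view TF1 has a single outgoing edge, while in the undirected view it also counts the feedback edge from METAB_X. Which view to use depends on the question being asked.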

Key Network Motifs in Plant Systems

Network motifs are statistically overrepresented subgraphs that serve as fundamental building blocks of complex biological networks [1]. In plant gene-metabolite networks, two primary classes of motifs play crucial regulatory roles: feed-forward loops and feedback loops [1]. A feed-forward loop consists of three nodes in which a master regulator controls both a target gene and an intermediate regulator that also controls the target. A feedback loop forms a circular connection in which nodes influence each other either directly or through intermediates, creating regulatory circuits that enable homeostasis or bistable switching.

These motifs represent the basic computational units of biological systems, each performing a specific dynamic function. For example, coherent feed-forward loops can delay responses in signal transduction, while incoherent feed-forward loops can accelerate responses or generate pulses [1]. Negative feedback loops enable precise homeostasis and robustness to perturbation, while positive feedback loops can create bistable switches for developmental transitions. In plant specialized metabolism, these motifs often underlie the complex regulatory circuits that control the production of valuable compounds such as alkaloids, flavonoids, and terpenoids [4] [5].
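The motif definitions above can be illustrated with a short search over a directed graph. This is only a sketch under simple assumptions: the regulator names are hypothetical, edges carry no activation or repression sign, and only direct two-node feedback is detected.

```python
# Enumerate feed-forward loops (X -> Y, Y -> Z, X -> Z) and direct two-node
# feedback pairs in a small directed regulatory graph. Names are hypothetical.
def find_feed_forward_loops(adj):
    """Return sorted (X, Y, Z) triples where X regulates Y and Z, and Y regulates Z."""
    loops = []
    for x, x_targets in adj.items():
        for y in x_targets:
            if y == x:
                continue
            for z in adj.get(y, ()):
                if z != x and z != y and z in x_targets:
                    loops.append((x, y, z))
    return sorted(loops)

def find_two_node_feedback(adj):
    """Return sorted pairs of nodes that regulate each other directly."""
    pairs = set()
    for a, targets in adj.items():
        for b in targets:
            if a != b and a in adj.get(b, ()):
                pairs.add(tuple(sorted((a, b))))
    return sorted(pairs)

regulation = {
    "MYB_master": {"bHLH_mid", "PAL_target"},  # master regulator
    "bHLH_mid": {"PAL_target"},                # intermediate regulator
    "PAL_target": set(),                       # target gene
    "SENSOR": {"REPRESSOR"},                   # mutual regulation = feedback
    "REPRESSOR": {"SENSOR"},
}

ffls = find_feed_forward_loops(regulation)
feedback_pairs = find_two_node_feedback(regulation)
```

Classifying a detected feed-forward loop as coherent or incoherent would additionally require a sign on every edge, which this sketch omits.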

Table 1: Classification of Network Motifs in Plant Gene-Metabolite Systems

| Motif Type | Structural Pattern | Functional Role | Examples in Plant Metabolism |
|---|---|---|---|
| Feed-forward Loop | Master regulator controls target through direct and indirect paths | Temporal processing; pulse generation | Regulation of phenylpropanoid pathway genes |
| Feedback Loop | Output influences its own production | Homeostasis; bistable switching | Sugar signaling in photosynthetic control |
| Single-input Module | Single regulator controls multiple targets | Coordinated expression | Gene clusters for specialized metabolites |
| Dense Overlapping Regulon | Multiple regulators control multiple targets | Combinatorial control | Response to environmental signals |

Comparative Analysis of Modeling Approaches

Correlation-Based Network Analysis

Correlation-based network approaches represent a widely used method for reconstructing gene-metabolite networks from high-throughput omics data. This methodology relies on statistical associations—typically calculated using Pearson correlation, mutual information, or Spearman rank correlation—to infer potential regulatory relationships between genes and metabolites [5]. The fundamental premise is that functionally related molecular entities exhibit coordinated abundance patterns across different experimental conditions, developmental stages, or genotypes.

The experimental workflow for correlation-based network analysis begins with comprehensive data collection using transcriptomics (RNA-Seq) and metabolomics (LC-MS/GC-MS) platforms applied to samples representing biological variation [6] [5]. Following data preprocessing and normalization, pairwise correlation matrices are computed between all gene-metabolite pairs. Statistical thresholds are then applied to identify significant associations, which are assembled into network representations. The resulting networks can be further refined using graph clustering algorithms to identify densely connected modules that represent functional units [5]. A key advantage of this approach is its ability to generate hypotheses from untargeted omics data without prior knowledge of pathway architecture.
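The correlation and thresholding steps can be sketched in a few lines of Python. The abundance profiles below are synthetic, the entity names are hypothetical, and the cutoff of 0.9 is an arbitrary illustrative choice rather than a recommended value.

```python
# Pearson-correlation gene-metabolite network from toy abundance profiles.
# Profiles, names, and the 0.9 cutoff are illustrative assumptions.
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant profiles."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Each list holds one value per sample (condition, tissue, or time point)
genes = {
    "gene1": [1.0, 2.0, 3.0, 4.0, 5.0],
    "gene2": [5.0, 4.0, 3.0, 2.0, 1.0],
    "gene3": [2.0, 1.0, 4.0, 3.0, 2.0],
}
metabolites = {
    "metab1": [2.1, 3.9, 6.2, 8.0, 9.9],
    "metab2": [1.0, 3.0, 2.0, 3.0, 1.0],
}

def correlation_edges(genes, metabolites, threshold=0.9):
    """Keep gene-metabolite pairs whose absolute correlation meets the threshold."""
    edges = []
    for g, g_profile in genes.items():
        for m, m_profile in metabolites.items():
            r = pearson(g_profile, m_profile)
            if abs(r) >= threshold:
                edges.append((g, m, round(r, 3)))
    return edges

edges = correlation_edges(genes, metabolites)
```

Here gene1 and gene2 are linked to metab1 with strong positive and negative correlation respectively, while gene3 and metab2 drop out. A real analysis would replace the fixed cutoff with significance testing and multiple-testing correction across all pairs.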

Table 2: Performance Comparison of Network Modeling Approaches

| Analytical Feature | Correlation Networks | Genome-Scale Models (GEMs) | Integrated Hybrid Models |
|---|---|---|---|
| Data Requirements | Transcriptomics + Metabolomics | Genome annotation + Biochemical data | Multi-omics + Kinetic parameters |
| Network Construction | Statistical inference from correlation | Knowledge-based curation from databases | Combined statistical & mechanistic modeling |
| Predictive Capabilities | Medium (associative relationships) | High (flux predictions at steady state) | High (dynamic & mechanistic) |
| Regulatory Insight | Identification of co-expression modules | Limited without integration of regulatory networks | Comprehensive including regulation |
| Experimental Validation Rate | 20-40% for top predictions [5] | 60-80% for metabolic engineering targets [1] | 70-90% for engineered pathways [1] |
| Computational Complexity | Medium | High | Very High |

Constraint-Based Genome-Scale Modeling

Constraint-based genome-scale metabolic modeling (GEM) represents a fundamentally different approach based on biochemical principles and mass conservation laws [1]. Unlike correlation-based methods, GEMs are constructed from meticulously curated biochemical knowledge of enzymatic reactions, substrate stoichiometries, and compartmentalization. The core mathematical framework represents metabolism as a stoichiometric matrix \( S \) where rows correspond to metabolites and columns represent biochemical reactions. The system is constrained by mass balance equations \( S \cdot v = 0 \), where \( v \) is the flux vector of reaction rates.
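To make the mass balance constraint concrete, the sketch below builds the stoichiometric matrix for a hypothetical five-reaction network and checks whether a candidate flux vector leaves every metabolite balanced. The reactions and flux values are invented for illustration.

```python
# Stoichiometric matrix S (rows: metabolites, columns: reactions) for a toy network.
# Hypothetical reactions: R1: -> A, R2: A -> B, R3: A -> C, R4: B ->, R5: C ->
metabolites = ["A", "B", "C"]
reactions = ["R1", "R2", "R3", "R4", "R5"]
S = [
    [1, -1, -1,  0,  0],  # A: made by R1, used by R2 and R3
    [0,  1,  0, -1,  0],  # B: made by R2, used by R4
    [0,  0,  1,  0, -1],  # C: made by R3, used by R5
]

def mass_balance(S, v):
    """Return S . v, the net production rate of each metabolite."""
    return [sum(s_ij * v_j for s_ij, v_j in zip(row, v)) for row in S]

def is_steady_state(S, v, tol=1e-9):
    """True when every metabolite's net production rate is zero within tol."""
    return all(abs(x) <= tol for x in mass_balance(S, v))

balanced = [10.0, 6.0, 4.0, 6.0, 4.0]    # uptake of 10 splits 6/4 and is fully exported
unbalanced = [10.0, 6.0, 4.0, 5.0, 4.0]  # B is produced faster than it is consumed

residual_balanced = mass_balance(S, balanced)
residual_unbalanced = mass_balance(S, unbalanced)
```

The second flux vector violates the constraint because metabolite B accumulates at a net rate of 1 unit, so it lies outside the feasible space.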

The analytical power of GEMs stems from their ability to predict phenotypic capabilities under different genetic and environmental conditions using methods such as Flux Balance Analysis (FBA) [1]. FBA identifies flux distributions that optimize cellular objectives—typically biomass production or synthesis of target compounds—within physicochemical constraints. For plant biosystems design, GEMs have been successfully applied to engineer central metabolism, photosynthetic efficiency, and the production of valuable natural products in species including Arabidopsis, rice, and tomato [2] [1]. The first plant GEM was developed for Arabidopsis over a decade ago, and today there are 35 published GEMs for more than 10 seed plant species [1].

Experimental Protocols and Workflow

Integrated Multi-Omics Network Analysis Protocol

The reconstruction of plant gene-metabolite networks requires a systematic workflow integrating multiple experimental and computational phases. A robust protocol begins with sample collection across multiple biological conditions—such as different tissues, developmental stages, or environmental treatments—to capture sufficient biological variation for correlation analysis [6] [5]. Tissues are immediately flash-frozen in liquid nitrogen to preserve metabolic profiles, followed by simultaneous extraction of RNA for transcriptomics and metabolites for metabolomics analysis.

For transcriptome profiling, RNA sequencing (RNA-Seq) is performed using standard library preparation protocols with sequencing depth of 20-50 million reads per sample to ensure adequate coverage of both highly and lowly expressed transcripts [5]. For metabolome analysis, complementary LC-MS and GC-MS platforms are employed to achieve broad coverage of metabolites with diverse physicochemical properties [6]. LC-MS is ideal for non-volatile compounds like flavonoids and alkaloids, while GC-MS provides superior analysis of volatile terpenes and primary metabolites. Data preprocessing includes peak detection, alignment, and annotation using mass spectral libraries, followed by normalization to account for technical variation.

The core computational workflow involves correlation calculation using appropriate statistical measures, followed by network reconstruction and module detection using graph clustering algorithms [5]. The resulting network modules are then functionally annotated through enrichment analysis and integrated with prior knowledge from databases such as KEGG, PlantCyc, and MetaCrop [2]. Candidate genes identified through this process require functional validation through heterologous expression in systems such as Nicotiana benthamiana [4] [5], followed by targeted genetic manipulation in the plant of interest using CRISPR-Cas9 or RNAi approaches [4].

Genome-Scale Metabolic Model Reconstruction Protocol

The construction of genome-scale metabolic models follows a distinct knowledge-based workflow beginning with genome annotation to identify metabolic genes and their enzymatic functions [1]. This initial phase leverages automated annotation pipelines complemented by extensive manual curation to ensure accurate assignment of gene functions, substrate specificity, and subcellular localization. The annotated genome forms the basis for draft model reconstruction by mapping enzymatic reactions to their corresponding genes using biochemical databases such as KEGG, MetaCyc, and PlantCyc [2].

The draft metabolic network is subsequently compartmentalized to reflect plant-specific cellular architecture, including chloroplasts, mitochondria, peroxisomes, vacuoles, and other organelles [2] [1]. This step requires careful assignment of transport reactions to enable metabolite exchange between compartments. The network is then converted into a stoichiometric matrix representation, and mass and charge balance constraints are applied to ensure biochemical consistency. The model is next subjected to gap-filling procedures to identify and rectify network gaps that prevent flux connectivity essential for biomass production.
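The conversion to a stoichiometric matrix and a first connectivity check can be sketched as follows. This is not a gap-filling algorithm, which would propose candidate reactions from a reference database; it only flags dead-end metabolites that such a procedure would need to resolve. All reaction and metabolite identifiers are hypothetical, and the `_c` and `_p` suffixes are an assumed convention for cytosol and plastid.

```python
# Build a stoichiometric matrix from reaction definitions and flag dead-end
# metabolites as a simple check before gap-filling. Identifiers are hypothetical;
# "_c" and "_p" mark an assumed cytosol / plastid compartment.
reactions = {
    "EX_glc":    {"glc_c": 1},                  # boundary uptake into the cytosol
    "T_glc":     {"glc_c": -1, "glc_p": 1},     # transport: cytosol -> plastid
    "R_syn":     {"glc_p": -1, "starch_p": 1},  # plastidial synthesis step
    "R_side":    {"glc_c": -1, "x_c": 1},       # side reaction producing x_c
    "EX_starch": {"starch_p": -1},              # boundary sink for the product
}

def build_stoichiometric_matrix(reactions):
    """Return (metabolite ids, reaction ids, S) with rows = metabolites."""
    mets = sorted({m for stoich in reactions.values() for m in stoich})
    rxns = list(reactions)
    S = [[reactions[r].get(m, 0) for r in rxns] for m in mets]
    return mets, rxns, S

def dead_end_metabolites(mets, S):
    """Metabolites that are only produced or only consumed.

    Assumes every reaction is irreversible and runs in the written direction.
    """
    dead = []
    for met, row in zip(mets, S):
        produced = any(c > 0 for c in row)
        consumed = any(c < 0 for c in row)
        if produced != consumed:
            dead.append(met)
    return dead

mets, rxns, S = build_stoichiometric_matrix(reactions)
gaps = dead_end_metabolites(mets, S)
```

In this toy network `x_c` is produced but never consumed, so any steady-state solution must carry zero flux through `R_side` until a consuming or export reaction is added.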

Model validation employs experimental flux measurements from stable isotope labeling experiments (e.g., ¹³C-labeling) and phenotypic data [1]. The validated model can subsequently be applied for predictive simulations using constraint-based modeling techniques such as Flux Balance Analysis (FBA) to identify gene knockout targets, nutrient optimization strategies, or potential bottlenecks in the synthesis of valuable natural products [2] [1].

Applications in Plant Biosystems Design

Metabolic Engineering of Valuable Plant Natural Products

Graph-theoretic approaches have dramatically accelerated the elucidation and engineering of biosynthetic pathways for valuable plant natural products. Successful applications include the complete decoding of pathways for anticancer compounds such as noscapine, vinblastine, and camptothecin, as well as neuroactive alkaloids including strychnine [5]. These breakthroughs were enabled by integrated omics analyses and network-based candidate gene prioritization. For example, researchers applied co-expression network analysis across 17 different tomato tissues to identify novel genes in the steroidal alkaloid pathway, demonstrating how network-based approaches can efficiently narrow candidate pools from thousands of genes to a manageable number for functional testing [5].

In another landmark application, graph-based analysis of tropane alkaloid biosynthesis combined transcriptomic and metabolomic data from multiple plant tissues to identify candidate genes, which were subsequently validated through heterologous expression in yeast [4]. This integrated approach significantly accelerated pathway discovery by overcoming traditional bottlenecks of labor-intensive genetic screening. Similarly, reconstruction of the diosmin biosynthetic pathway in Nicotiana benthamiana required the coordinated expression of five to six flavonoid pathway enzymes, producing yields up to 37.7 µg/g fresh weight [4]. These case studies demonstrate how network modeling guides the rational engineering of complex plant metabolic pathways rather than relying on random trial-and-error approaches.

Engineering Plant Central Metabolism

Beyond specialized metabolites, graph-theoretic modeling has been successfully applied to optimize plant central metabolism for improved agronomic traits and nutritional quality. Constraint-based models of photosynthetic carbon metabolism have identified strategic enzyme targets for enhancing photosynthetic efficiency and carbon allocation [2]. Similarly, flux balance analysis of sucrose synthesis and the tricarboxylic acid cycle in leaves has revealed non-intuitive strategies for manipulating energy metabolism and respiratory efficiency [2].

Network analysis has also illuminated the distributed control of flux through highly branched biosynthetic pathways, explaining why single-gene manipulations often yield disappointing results [2]. For instance, studies of glutamate decarboxylase (GAD) genes in tomato revealed that five GAD genes contribute to GABA biosynthesis, with two (SlGAD2 and SlGAD3) predominantly expressed during fruit development [4]. CRISPR-Cas9 editing of these two key genes increased GABA accumulation by 7- to 15-fold, demonstrating how network identification of key targets enables successful metabolic engineering [4].

Essential Research Toolkit

Table 3: Essential Computational Tools and Databases for Network Analysis

| Tool/Database | Primary Function | Application Context | Key Features |
|---|---|---|---|
| Cytoscape [7] | Network visualization and analysis | All network types | Customizable visual styles, plugin architecture, data integration |
| ggraph/graphlayouts [8] | Network visualization in R | Correlation networks, GEM visualization | Grammar of graphics integration, publication-quality figures |
| KEGG/PlantCyc [2] | Pathway database and reference | Network annotation, GEM reconstruction | Manually curated pathways, organism-specific databases |
| MetaCrop [2] | Crop metabolism database | GEM reconstruction for crops | Manually curated crop metabolic pathways |
| OrthoFinder [5] | Homology-based gene family analysis | Cross-species network comparison | Accurate orthogroup inference, phylogenetic analysis |
| MaxEnt [9] | Species distribution modeling | Ecological network applications | Environmental variable integration, habitat prediction |
| MixNet [3] | Network connectivity profiling | Module detection in correlation networks | Mixture models for connectivity profiles |
| STRING [3] | Protein-protein interaction database | Network contextualization | Multiple evidence channels, confidence scoring |

Table 4: Experimental Platforms for Network Validation

| Experimental Platform | Primary Application | Key Advantages | Throughput |
|---|---|---|---|
| Nicotiana benthamiana transient expression [4] [5] | Rapid gene function validation | High efficiency, simultaneous multi-gene expression, suitable for plant enzymes | Medium-High |
| Stable plant transformation [4] | In planta functional analysis | Biological context preserved, stable inheritance | Low |
| CRISPR-Cas9 genome editing [4] | Targeted gene knockout/editing | Precision editing, multiplexing capability | Medium |
| Heterologous microbial expression [5] | Enzyme biochemical characterization | Controlled expression, purification facilitation | High |
| LC-MS/GC-MS metabolomics [6] | Metabolic profiling and flux analysis | Comprehensive coverage, quantitative precision | High |

Graph theory has fundamentally transformed our approach to understanding and engineering plant metabolism by providing rigorous mathematical frameworks to represent biological complexity. The comparative analysis presented here demonstrates that correlation-based networks and genome-scale metabolic models offer complementary strengths—the former excelling at hypothesis generation from omics data, and the latter providing mechanistic predictive capabilities for metabolic engineering. Future advances will likely focus on hybrid approaches that integrate these methodologies while incorporating additional data types, including protein-protein interactions, epigenetic modifications, and spatial metabolomics.

The emerging frontier in plant network biology involves the development of dynamic whole-cell models that capture both metabolic and regulatory networks across multiple cellular compartments and tissue types [1]. Realizing this vision will require advances in single-cell omics technologies to resolve network organization at cellular resolution, combined with machine learning approaches to infer network topology from large-scale perturbation data [6] [5]. Additionally, the application of graph neural networks and other geometric deep learning methods promises to enhance our ability to predict network behavior and identify optimal engineering strategies. As these computational approaches mature, they will increasingly guide the rational design of plant biosystems for sustainable production of pharmaceuticals, biomaterials, and resilient crops, ultimately establishing a new paradigm in plant biotechnology.

Mechanistic modeling of cellular metabolism is a fundamental approach in systems biology that seeks to quantitatively understand and predict the behavior of biological systems based on underlying physical and biochemical principles. These models are built upon the law of mass conservation, which provides the mathematical foundation for analyzing metabolic networks by tracking the flow of chemical elements through biological systems. The core principle involves representing metabolism as a network of biochemical reactions where metabolites and reactions serve as nodes and edges, respectively, enabling researchers to decipher the fluxes of chemical elements within plant and microbial systems.

The development of genome-scale metabolic models (GEMs) represents a significant advancement in the field, allowing for system-level predictions of metabolism across diverse organisms. For plant biosystems design, mechanistic modeling provides a critical framework for linking genetic information to phenotypic traits, thereby enabling predictive design of plant systems. These models have evolved from simple pathway analyses to comprehensive genome-scale networks that can simulate an organism's metabolic capabilities under various genetic and environmental conditions. The iterative process of model construction, refinement, and validation has become increasingly sophisticated with the integration of multi-omics data and computational algorithms, making mechanistic modeling an indispensable tool for both basic research and applied biotechnology.

Fundamental Theoretical Frameworks

Mass Conservation and Stoichiometric Analysis

The principle of mass conservation forms the mathematical backbone of constraint-based metabolic modeling, where the rate of change for each metabolite in a network is described by a system of ordinary differential equations. Mathematically, this is expressed as \( \frac{dX}{dt} = S \cdot v \), where \( X \) is the vector of metabolite concentrations, \( S \) is the stoichiometric matrix containing the coefficients of each metabolite in every reaction, and \( v \) is the vector of metabolic fluxes. Under the quasi-steady-state assumption (QSSA), which exploits the fact that metabolism operates on a faster timescale than regulatory processes, the system simplifies to \( S \cdot v = 0 \). This fundamental equation forms the basis for constraint-based reconstruction and analysis (COBRA) methods that enable quantitative description of cellular phenotypic characteristics.

The stoichiometric matrix \( S \) is a mathematical representation of the metabolic network structure, with rows corresponding to metabolites and columns to reactions. This formulation defines the mass balance for each metabolite as the difference between the fluxes of its producing and consuming reactions. For genome-scale models, the result is a linear system in which the stoichiometric matrix multiplied by the flux vector yields the net production rate of each compound. This constraint-based approach avoids the need for detailed kinetic information, which is unavailable for most enzymatic reactions, while still capturing the fundamental capabilities and limitations of metabolic networks.

Constraint-Based Modeling and Flux Balance Analysis

Flux Balance Analysis (FBA) has emerged as the primary method for constraint-based analysis of genome-scale metabolic models. FBA predicts metabolic phenotypes by solving for a flux distribution that satisfies the mass balance constraints (\( S \cdot v = 0 \)) while optimizing a specified cellular objective, typically the maximization of biomass production in microorganisms. The solution space is further constrained by reaction reversibility and known flux measurements, such as nutrient uptake rates. This formulation transforms the biological problem into a linear programming optimization problem that can be efficiently solved computationally.
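Written out, the optimization described above takes the standard linear-programming form below. The objective weight vector \( c \) and the bound symbols \( v_j^{\min} \) and \( v_j^{\max} \) are notation introduced here for illustration; they do not appear in the original text.

```latex
\begin{aligned}
\max_{v} \quad & c^{\top} v \\
\text{subject to} \quad & S \cdot v = 0, \\
& v_j^{\min} \le v_j \le v_j^{\max} \quad \text{for every reaction } j .
\end{aligned}
```

Reaction irreversibility corresponds to setting \( v_j^{\min} = 0 \), and a measured nutrient uptake rate fixes or caps the bound on the corresponding exchange reaction. As a toy case, consider a linear chain of uptake, conversion, and export with the uptake capped at 10 flux units and export as the objective: the steady-state constraint forces all three fluxes to be equal, so the optimum is 10 for each.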

The predictive power of FBA stems from its ability to analyze metabolic networks without requiring extensive kinetic parameters. However, this approach has limitations in quantitative predictions unless labor-intensive measurements of media uptake fluxes are performed. Recent advances have addressed this limitation through hybrid modeling approaches that integrate machine learning with mechanistic constraints. For plant systems, FBA has been successfully implemented in models such as AraGEM for Arabidopsis thaliana, which contains 1,625 reactions, 1,419 genes, and 1,515 metabolites distributed across multiple cellular compartments.

Table 1: Key Genome-Scale Metabolic Models and Their Components

| Organism | Model Name | Reactions | Genes | Metabolites, unique (total) | Compartments |
|---|---|---|---|---|---|
| Homo sapiens | Recon 2.2 | 7,785 | 1,675 | 2,652 (5,324) | 9 |
| Escherichia coli | iJO1366 | 2,583 | 1,366 | 1,136 (1,805) | 3 |
| Saccharomyces cerevisiae | iTO977 | 1,562 | 977 | 817 (1,353) | 4 |
| Arabidopsis thaliana | AraGEM | 1,625 | 1,419 | 1,515 (1,748) | 6 |
| Mus musculus | iMM1415 | 3,724 | 1,415 | 1,503 (2,774) | 8 |

Comparative Analysis of Modeling Approaches

Classical Constraint-Based Methods

Classical constraint-based methods, including FBA, Flux Variability Analysis (FVA), and Elementary Mode Analysis (EMA), have been extensively applied to model organism metabolic networks. These approaches share the fundamental principle of exploiting stoichiometric, thermodynamic, and capacity constraints to define the feasible solution space for metabolic fluxes. FBA identifies a single optimal flux distribution based on a presumed cellular objective, while FVA determines the range of possible fluxes for each reaction within the feasible space. EMA identifies all minimal functional units within the network that can operate independently.

The performance of these methods varies significantly depending on the organism and environmental conditions. For unicellular organisms like Escherichia coli and Saccharomyces cerevisiae growing in defined media, FBA predictions of growth rates typically achieve accuracy rates of 70-85% when compared with experimental measurements. However, for multicellular systems and complex media conditions, the accuracy decreases substantially to 45-60%, primarily due to inappropriate objective functions and insufficient constraints. A comparative analysis of gene essentiality predictions across multiple models revealed that FBA correctly identified 80% of essential genes in E. coli, but only 65% in Arabidopsis thaliana, highlighting the challenges in modeling eukaryotic systems.

Kinetic Modeling Approaches

Kinetic modeling represents a more detailed approach that incorporates enzyme mechanisms, regulatory interactions, and metabolite concentrations to capture dynamic metabolic behaviors. Unlike constraint-based methods, kinetic models require detailed information on enzyme kinetics and enzyme regulation, which presents significant challenges for genome-scale applications due to parameter uncertainty. The ORACLE (Optimization and Risk Analysis of Complex Living Entities) framework addresses this limitation by constructing large-scale mechanistic kinetic models that investigate the complex interplay between stoichiometry, thermodynamics, and kinetics.

Studies using ORACLE have revealed that enzyme saturation is a critical consideration in metabolic network modeling, as it extends the feasible ranges of metabolic fluxes and metabolite concentrations. This approach suggests that enzymes in metabolic networks have evolved to function at different saturation states to ensure greater flexibility and robustness of cellular metabolism. However, the application of kinetic modeling to plant systems remains limited due to the scarcity of kinetic data for plant-specific enzymes and the computational complexity associated with multi-compartmental models in photosynthetic organisms.

Hybrid Neural-Mechanistic Approaches

Recent advances in machine learning have enabled the development of hybrid neural-mechanistic models that enhance the predictive power of traditional constraint-based approaches. These models embed mechanistic constraints within artificial neural network architectures, creating Artificial Metabolic Networks (AMNs) that can be trained on experimental data. The neural component serves as a preprocessing layer that captures complex relationships between environmental conditions and uptake fluxes, while the mechanistic layer ensures biochemical feasibility.

This hybrid approach systematically outperforms traditional constraint-based models, achieving 25-40% higher accuracy in predicting growth rates of Escherichia coli and Pseudomonas putida across different media conditions. Remarkably, these models require training set sizes orders of magnitude smaller than classical machine learning methods, effectively addressing the curse of dimensionality that often plagues biological machine learning applications. For gene essentiality predictions, hybrid models demonstrate 15-30% improvement over FBA alone, particularly for genes with complex regulatory effects or in conditions where cellular objectives may shift.

Table 2: Performance Comparison of Metabolic Modeling Approaches

| Modeling Approach | Accuracy (Unicellular) | Accuracy (Multicellular) | Data Requirements | Computational Complexity |
|---|---|---|---|---|
| Flux Balance Analysis | 70-85% | 45-60% | Low | Low |
| Kinetic Modeling | 80-90% | 60-75% | Very High | Very High |
| Hybrid Neural-Mechanistic | 85-95% | 70-85% | Medium | Medium-High |
| 13C-Flux Analysis | >90% | 75-85% | High | Medium |

Experimental Protocols for Model Validation

Genome-Scale 13C-Flux Analysis Protocol

13C-flux analysis provides experimental validation of metabolic model predictions by quantifying intracellular metabolic fluxes using stable isotope tracers. The protocol consists of two main steps: (1) analytical identification of metabolic flux ratios using probabilistic equations derived from 13C distribution in proteinogenic amino acids, and (2) estimation of absolute fluxes from physiological data and the flux ratios as constraints. This approach has been successfully applied to quantify flux responses to genetic perturbations in Saccharomyces cerevisiae, revealing that approximately half of the 745 biochemical reactions in the network were active during growth on glucose.

The experimental workflow begins with cultivating organisms in minimal media containing a mixture of 13C-labeled and unlabeled carbon sources, typically 20% [U-13C] and 80% unlabeled glucose. After achieving steady-state growth, samples are harvested for analysis of 13C incorporation into proteinogenic amino acids using gas chromatography-mass spectrometry (GC-MS). The labeling patterns are then used to compute seven independent metabolic flux ratios that serve as constraints for flux estimation. Finally, absolute intracellular fluxes are calculated using a compartmentalized stoichiometric model that incorporates uptake/production rates and the determined flux ratios.

Gene Essentiality and Robustness Analysis

Systematic analysis of gene essentiality provides critical validation data for metabolic models. The experimental protocol involves constructing prototrophic deletion mutants for genes encoding metabolic enzymes, followed by quantitative assessment of growth phenotypes in defined media. In a comprehensive study of Saccharomyces cerevisiae, 38 genes encoding 28 potentially flexible and highly active reactions were deleted, encompassing pathways including the pentose phosphate pathway, TCA cycle, glyoxylate cycle, polysaccharide synthesis, mitochondrial transporters, and by-product formation.

Fitness defects are quantified by determining maximum specific growth rates in minimal and complex media using well-aerated microtiter plate systems. Mutant fitness is expressed as normalized growth rate relative to the reference strain. Physiological data quantify the fitness defect, while 13C-flux analysis identifies intracellular mechanisms that confer robustness to the deletion. This integrated approach revealed that for the 207 viable mutants of active reactions in yeast, network redundancy through duplicate genes was the major mechanism (75%) and alternative pathways the minor mechanism (25%) of genetic network robustness.
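
As a minimal sketch of the fitness calculation described above, the code below estimates a maximum specific growth rate as the steepest slope of ln(OD) against time over a sliding window, then normalizes the mutant value to the reference strain. The window size, function names, and the synthetic growth curves are assumptions for illustration, not details from the cited study.

```python
import math


def max_specific_growth_rate(times_h, od_values, window=4):
    """Largest least-squares slope of ln(OD) vs. time over any sliding window (per hour)."""
    logs = [math.log(od) for od in od_values]
    best = float("-inf")
    for start in range(len(times_h) - window + 1):
        t = times_h[start:start + window]
        y = logs[start:start + window]
        t_mean = sum(t) / window
        y_mean = sum(y) / window
        sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
        sxx = sum((ti - t_mean) ** 2 for ti in t)
        best = max(best, sxy / sxx)
    return best


def normalized_fitness(mu_mutant, mu_reference):
    """Mutant growth rate expressed relative to the reference strain."""
    return mu_mutant / mu_reference


# Synthetic, noise-free exponential growth curves (hypothetical values)
times = [0, 1, 2, 3, 4, 5]
reference_od = [0.05 * math.exp(0.40 * t) for t in times]
mutant_od = [0.05 * math.exp(0.30 * t) for t in times]

mu_ref = max_specific_growth_rate(times, reference_od)
mu_mut = max_specific_growth_rate(times, mutant_od)
print(round(normalized_fitness(mu_mut, mu_ref), 3))
```

With real microtiter plate data the window should cover only the exponential phase, and blank-corrected OD values are needed before taking logarithms.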

Research Reagent Solutions for Metabolic Modeling

Table 3: Essential Research Reagents and Databases for Metabolic Modeling

| Reagent/Database | Type | Function | Application in Plant Systems |
|---|---|---|---|
| KEGG | Database | Bioinformatics database containing genes, proteins, reactions, pathways | Reference for pathway annotation and comparative analysis |
| BioCyc/MetaCyc | Database | Encyclopedia of experimentally defined metabolic pathways and enzymes | Curation of plant-specific metabolic pathways |
| BRENDA | Database | Comprehensive enzyme information with kinetic parameters | Kinetic parameter estimation for plant enzyme reactions |
| [U-13C] Glucose | Isotope Tracer | 13C-labeling for experimental flux determination | Quantification of in vivo fluxes in plant tissues |
| Pathway Tools | Software | Bioinformatics software for pathway/genome database construction | Plant metabolic pathway visualization and analysis |
| ModelSEED | Online Resource | Automated reconstruction of genome-scale metabolic models | Draft model generation for non-model plant species |
| Cobrapy | Python Library | Constraint-based modeling of metabolic networks | FBA simulation and analysis of plant GEMs |

Visualization of Metabolic Modeling Workflows

Diagrams (not reproduced here): Genome-Scale Metabolic Reconstruction Pipeline; Hybrid Neural-Mechanistic Modeling Architecture.

Applications in Plant Biosystems Design

Mechanistic modeling of plant metabolic networks has become an indispensable tool for plant biosystems design, enabling predictive manipulation of plant systems for improved traits and performance. The application of GEMs to plants allows researchers to interrogate the complex interactions between different metabolic pathways, subcellular compartments, and cell types that characterize plant systems. Plant biosystems design seeks to accelerate plant genetic improvement using genome editing and genetic circuit engineering or create novel plant systems through de novo synthesis of plant genomes.

Several theoretical approaches support plant biosystems design, including graph theory for visualizing plant system structure, mechanistic models linking genes to phenotypic traits, and evolutionary dynamics theory for predicting genetic stability. From the perspective of biosystems design, a plant biosystem can be defined as a dynamic network of genes and multiple intermediate molecular phenotypes distributed in a four-dimensional space: three spatial dimensions of structure and one temporal dimension. Mechanistic modeling provides the framework to navigate this complexity and make informed engineering decisions.

Current challenges in plant metabolic modeling include the construction of genome-scale metabolic/regulatory networks with labeled subnetworks, mathematical modeling of these networks for accurate phenotype prediction, sharing of consensus predictive models, insufficient knowledge of network linkages, and limited data on metabolite concentrations in different compartments and cell types. Advances in single-cell/single-cell-type omics are critically required to address these challenges and advance the field of plant biosystems design.

Plant biosystems design represents a paradigm shift in plant science, moving from traditional trial-and-error approaches toward innovative strategies grounded in predictive models of biological systems [10] [11]. This emerging interdisciplinary field aims to accelerate plant genetic improvement through genome editing and genetic circuit engineering, potentially creating novel plant systems via de novo synthesis of plant genomes [10]. Within this comparative context, evolutionary dynamics theory provides essential frameworks for evaluating the performance and potential of various biosystems design approaches.

A fundamental challenge in engineered biological systems is their stability over evolutionary time in the absence of selective pressure [12]. The capacity to generate adaptive variation—termed evolvability—is itself a trait that can evolve through natural selection [13]. This review employs a comparative framework to analyze two contrasting strategies for managing evolutionary dynamics: (1) engineering for evolutionary robustness to maintain functional stability, and (2) harnessing evolvability to enhance adaptive potential. Through explicit comparison of these approaches, we provide researchers with critical insights for selecting appropriate strategies based on their specific application requirements.

Theoretical Foundations: Plasticity, Robustness, and Evolvability

The evolutionary dynamics of biological systems are governed by the interplay between three fundamental properties: phenotypic plasticity, robustness, and evolvability. Understanding these concepts is essential for predicting genetic stability and designing systems with controlled evolutionary potential.

Phenotypic plasticity and robustness represent two complementary aspects of developmental evolution. Plasticity concerns the sensitivity of phenotypic expression to environmental and genetic changes, while robustness describes the degree of insensitivity to such perturbations [14]. The variance of phenotype distribution characterizes both properties, with sensitivity increasing with variance. Theoretical models demonstrate that the response ratio is proportional to phenotypic variance, extending the fluctuation-response relationship from statistical physics to evolutionary biology [14].

Through robust evolution, phenotypic variance caused by genetic change decreases in proportion to developmental noise. This evolution toward increased robustness occurs only when developmental noise is sufficiently large, indicating that robustness to environmental fluctuations leads to robustness to genetic mutations [14]. These general relationships hold across different phenotypic traits and have been validated through macroscopic phenomenological theory, gene-expression dynamics models, and laboratory selection experiments [14].
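
The two proportionalities described above can be written compactly. The notation below is an assumption introduced for illustration: $x$ is a phenotypic trait, $a$ is an environmental or genetic parameter being shifted, $V_{ip}$ is the phenotypic variance among isogenic individuals (developmental noise), and $V_g$ is the phenotypic variance attributable to genetic change. The exact forms and constants should be taken from [14].

```latex
% Fluctuation-response relationship: the response to a small parameter change
% scales with the phenotypic variance before the change
\frac{\langle x \rangle_{a+\Delta a} - \langle x \rangle_{a}}{\Delta a}
  \;\propto\; \langle (\delta x)^2 \rangle_{a}

% Robust evolution: genetic variance decreases in proportion to developmental noise
V_g \;\propto\; V_{ip}
```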

Table 1: Theoretical Properties Governing Evolutionary Dynamics

| Property | Definition | Measurement Approach | Relationship to Variance |
|---|---|---|---|
| Phenotypic Plasticity | Response of phenotype to environmental/genetic changes | Response ratio to controlled perturbations | Proportional to phenotype variance |
| Robustness | Insensitivity to environmental/genetic perturbations | Inverse of phenotype variance | Inversely proportional to variance |
| Evolvability | Capacity to generate heritable adaptive variation | Rate of adaptive mutations in fluctuating environments | Dependent on variance in mutational mechanisms |

Evolvability represents the capacity of a population to generate heritable variation that facilitates adaptation to new environments or selection pressures [15]. Recent theoretical work suggests that evolvability itself can evolve as a phenotypic trait, with natural selection potentially shaping genetic systems to enhance future adaptation capacity [16] [13]. This challenges traditional perspectives that view evolution as merely a "blind" process driven by random mutations, suggesting instead that selection can favor mechanisms that channel mutations toward adaptive outcomes [16].

Figure 1: Theoretical Relationships Between Evolutionary Properties. This diagram illustrates how environmental fluctuations, genetic variation, and developmental noise contribute to phenotypic variance, which in turn influences plasticity, robustness, and evolvability.

Comparative Analysis of Engineering Approaches

Evolutionary Robustness Engineering in Genetic Circuits

Engineering evolutionary robustness focuses on designing genetic systems that maintain functional stability over multiple generations, particularly in the absence of selective pressure. This approach prioritizes predictable, consistent performance—a critical requirement for many agricultural and industrial applications.

Experimental studies with Escherichia coli have identified specific design principles that enhance evolutionary robustness. One foundational experiment measured the stability of BioBrick-assembled genetic circuits (T9002 and I7101) propagated over multiple generations, identifying the mutations that caused loss-of-function [12]. The T9002 circuit lost function in less than 20 generations due to a deletion between two homologous transcriptional terminators. When researchers re-engineered this circuit with non-homologous terminators, the evolutionary half-life improved over 2-fold. Further stability gains (over 17-fold) were achieved by combining non-homologous terminators with a 4-fold reduction in expression level [12].

The second circuit, I7101, lost function in less than 50 generations due to a deletion between repeated operator sequences in the promoter. This circuit was subsequently re-engineered with different promoters from a promoter library, demonstrating that evolutionary stability dynamics could be modulated through careful design [12]. Across all experiments, a clear relationship emerged: evolutionary half-life exponentially decreases with increasing expression levels, highlighting the fundamental trade-off between function and stability.
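
The exponential relationship between expression level and evolutionary half-life can be sketched as a log-linear fit. The model form `half_life = a * exp(-k * expression)` matches the description above, but the data points below are hypothetical and the function names are illustrative. Nothing here reproduces the fitted parameters from [12].

```python
import math


def fit_exponential_decay(expression, half_life):
    """Fit half_life = a * exp(-k * expression) by least squares on ln(half_life).

    Returns (a, k).
    """
    n = len(expression)
    y = [math.log(h) for h in half_life]
    x_mean = sum(expression) / n
    y_mean = sum(y) / n
    sxx = sum((x - x_mean) ** 2 for x in expression)
    sxy = sum((x - x_mean) * (yi - y_mean) for x, yi in zip(expression, y))
    slope = sxy / sxx
    a = math.exp(y_mean - slope * x_mean)
    return a, -slope


def predict_half_life(a, k, expression_level):
    """Predicted evolutionary half-life (generations) at a given expression level."""
    return a * math.exp(-k * expression_level)


# Hypothetical measurements: relative expression level vs. half-life in generations
expression_levels = [1.0, 2.0, 3.0, 4.0]
half_lives = [180.0, 110.0, 65.0, 40.0]

a, k = fit_exponential_decay(expression_levels, half_lives)
print(f"a = {a:.1f} generations, k = {k:.3f} per expression unit")
print(f"predicted half-life at expression 2.5: {predict_half_life(a, k, 2.5):.1f}")
```

A fit of this kind makes the trade-off explicit: each unit of added expression multiplies the expected half-life by a constant factor `exp(-k)`.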

Table 2: Comparative Performance of Engineered Genetic Circuits

| Circuit Design | Evolutionary Half-Life (generations) | Primary Failure Mechanism | Stability Enhancement Strategy | Resulting Improvement |
|---|---|---|---|---|
| T9002 (Original) | <20 | Deletion between homologous terminators | N/A | Baseline |
| T9002 (Non-homologous Terminators) | >40 | Multiple distributed mutations | Eliminate sequence repeats | 2-fold stability increase |
| T9002 (Low Expression + Non-homologous Terminators) | >340 | Not determined | Reduce expression 4-fold + eliminate repeats | 17-fold stability increase |
| I7101 (Original) | <50 | Deletion between operator sequences | N/A | Baseline |
| I7101 (Promoter Variants) | Variable (25-100+) | Promoter-specific mutations | Library screening for stable promoters | Up to 2-fold stability increase |

Harnessing Evolvability for Adaptive Systems

In contrast to robustness engineering, evolvability-based approaches intentionally incorporate mechanisms that enhance a system's capacity to adapt to changing environments. This strategy is particularly valuable for applications in unpredictable environments or when designing systems that require ongoing optimization.

A landmark study provided experimental evidence that natural selection can shape genetic systems to enhance future adaptation capacity [16]. Researchers subjected microbial populations to an intense selection regime requiring repeated transitions between two phenotypic states under fluctuating environmental conditions. Lineages unable to develop the required phenotype were eliminated and replaced by successful ones, creating conditions for selection to operate at the level of lineages [16].

Through analysis of more than 500 mutations, the study revealed the emergence of a localized hyper-mutable genetic mechanism in certain microbial lineages. This hypermutable locus exhibited a mutation rate up to 10,000 times higher than the original lineage and enabled rapid, reversible transitions between phenotypic states through a mechanism analogous to contingency loci in pathogenic bacteria [16] [13]. Subsequent evolution demonstrated that the hypermutable locus is itself evolvable with respect to alterations in the frequency of environmental change [13].
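
A back-of-the-envelope calculation shows why a 10,000-fold higher local mutation rate matters for repeated phenotypic switching. The sketch assumes independent cell divisions, each with the same probability of producing the switching mutation. The baseline rate and the number of divisions are hypothetical values chosen for illustration. Only the 10,000-fold factor comes from the text.

```python
import math


def prob_at_least_one_switch(mutation_rate, divisions):
    """P(at least one switching mutation) = 1 - (1 - mutation_rate) ** divisions.

    Computed with log1p/expm1 so that very small rates stay numerically stable.
    """
    return -math.expm1(divisions * math.log1p(-mutation_rate))


# Hypothetical values, not taken from the cited study
baseline_rate = 1e-9       # switching mutations per division at an ordinary locus
fold_increase = 10_000     # upper bound reported for the hypermutable locus
divisions = 1_000_000      # cell divisions available before the environment changes

p_baseline = prob_at_least_one_switch(baseline_rate, divisions)
p_hypermutable = prob_at_least_one_switch(baseline_rate * fold_increase, divisions)
print(f"ordinary locus:     {p_baseline:.4f}")
print(f"hypermutable locus: {p_hypermutable:.4f}")
```

Under these assumptions a lineage with the ordinary locus rarely produces the required variant in time, while a lineage with the hypermutable locus almost always does, which is the kind of asymmetry lineage-level selection can act on.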

Theoretical models support these experimental findings, revealing robust adaptive trajectories where highly evolvable individuals rapidly explore the phenotypic landscape to locate optimal fitness peaks [15]. Mathematical simulations of stochastic individual-based models show that populations follow a "first explore, then settle" pattern, where evolvability is initially beneficial but becomes costly once optimal phenotypes are established [15].

Experimental Protocols and Methodologies

Evolutionary Stability Assessment Protocol

The experimental measurement of evolutionary stability follows a standardized serial propagation approach that enables quantitative assessment of functional half-life:

Strain Construction: Clone genetic circuits into appropriate vectors (typically high-copy plasmids to maximize selective pressure and accelerate evolutionary dynamics).

Serial Propagation: Dilute cultures daily to allow approximately 10 generations per day, transferring to fresh media at fixed intervals.

Function Monitoring: Regularly sample populations and measure circuit function under inducing conditions (e.g., fluorescence after inducer addition for reporter circuits).

Normalization: Express function as normalized values (e.g., fluorescence divided by cell density) to account for population density variations.

Mutation Analysis: Isolate non-functional clones and sequence entire circuits to identify loss-of-function mutations.

Reconstruction Validation: Transform mutant plasmids back into progenitor strains to confirm they cause observed functional losses.

This protocol revealed that loss-of-function mutations encompass a wide variety of types including point mutations, small insertions and deletions, large deletions, and insertion sequence (IS) element insertions that frequently occur in scar sequences between biological parts [12].
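
The normalization and half-life steps of the protocol above can be sketched as follows. The code assumes that "half-life" means the number of generations at which density-normalized function first falls to 50% of its initial value, located by linear interpolation between sampling points. That definition, the function name, and the sample data are illustrative assumptions.

```python
def evolutionary_half_life(generations, fluorescence, cell_density):
    """Generations until normalized function first drops to half its initial value.

    Returns None if the function never falls to 50% within the sampled range.
    """
    normalized = [f / d for f, d in zip(fluorescence, cell_density)]
    relative = [v / normalized[0] for v in normalized]
    for i in range(1, len(relative)):
        if relative[i] <= 0.5:
            g0, g1 = generations[i - 1], generations[i]
            r0, r1 = relative[i - 1], relative[i]
            # Linear interpolation between the two samples bracketing 50%
            return g0 + (r0 - 0.5) * (g1 - g0) / (r0 - r1)
    return None


# Hypothetical serial-propagation data sampled every 10 generations
generations = [0, 10, 20, 30]
fluorescence = [1000.0, 900.0, 600.0, 200.0]   # arbitrary units, after induction
cell_density = [1.0, 1.0, 1.0, 1.0]            # e.g. OD600

print(evolutionary_half_life(generations, fluorescence, cell_density))
```

Comparing half-lives computed this way for an original and a re-engineered circuit gives the fold-improvement figures of the kind reported in Table 2.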

Evolvability Selection Experiment Protocol

Experimental evolution of evolvability requires careful design to create conditions where enhanced adaptation capacity is advantageous:

Fluctuating Environment Design: Establish conditions requiring repeated transitions between phenotypic states (e.g., alternating between two metabolic requirements or environmental conditions).

Lineage-Level Selection: Implement a regime where entire lineages are eliminated if they cannot achieve required phenotypic transitions and are replaced by successful lineages.

Intense Selection Pressure: Maintain conditions where success depends specifically on the capacity to evolve between phenotypic states rather than static performance.

Long-Term Propagation: Continue experiments over extended periods (e.g., three years in the referenced study) to allow complex evolutionary solutions to emerge.

Comprehensive Mutation Analysis: Sequence multiple evolved lineages to identify mutations and quantify mutation rates in specific genomic regions.

Validation of Adaptive Potential: Test whether evolved mechanisms genuinely enhance future adaptation capacity by challenging lineages with novel environmental conditions.

This approach demonstrated that through multi-step evolutionary processes, populations can evolve specialized genetic mechanisms that enhance their capacity for future adaptation [16] [13].

Figure 2: Experimental Workflows for Assessing Evolutionary Properties. The diagram compares two methodological approaches: robustness assessment (top) focuses on stability measurement and re-engineering, while evolvability selection (bottom) examines the emergence of adaptive mechanisms.

The Scientist's Toolkit: Research Reagent Solutions

Successful research in evolutionary dynamics requires specialized reagents and tools designed for precise manipulation and monitoring of genetic systems.

Table 3: Essential Research Reagents for Evolutionary Dynamics Studies

| Reagent/Tool | Function | Application Examples | Performance Considerations |
|---|---|---|---|
| BioBrick Standard Biological Parts | Modular DNA sequences encoding basic biological functions | Circuit construction using standardized assembly | Well-characterized parts improve predictability; scar sequences can affect stability |
| Inducible Promoter Systems | Enable controlled gene expression in response to chemical inducers | Tunable expression to balance function and metabolic load | Reduce evolutionary stability cost; allow expression optimization |
| Fluorescent Reporter Proteins | Quantitative monitoring of circuit function | Real-time tracking of functional stability during evolution | Enable high-throughput screening of population dynamics |
| Hypermutable Contingency Loci | Targeted genetic regions with elevated mutation rates | Enhanced adaptation in fluctuating environments | Can increase mutation rates 10,000-fold; must be carefully controlled |
| Synthetic Genetic Circuits | Pre-assembled functional units for specific operations | Rapid prototyping of engineered systems | Evolutionary stability varies with design; repeated sequences decrease stability |

Comparative Performance in Applied Contexts

The choice between robustness-focused and evolvability-focused design strategies depends critically on the application context and performance requirements.

For industrial and agricultural applications where consistent, predictable performance is paramount, evolutionary robustness principles provide essential guidance: minimize repeated sequences, use inducible promoters, optimize expression levels to balance function and stability, and avoid unnecessary homology between genetic elements [12]. These approaches prioritize long-term functional stability, making them ideal for contained, controlled environments.

In contrast, for environmental applications or contexts with unpredictable fluctuating conditions, evolvability-enhancing strategies may offer superior performance. The development of targeted hypermutable loci enables populations to adapt rapidly to changing conditions, essentially building "adaptive foresight" into the genetic architecture [16]. This approach mirrors natural contingency mechanisms used by pathogenic bacteria to evade host immune systems.

Mathematical modeling reveals that the interaction between selection strength and evolvability cost determines optimal strategy selection [15]. When both selection and cost are highly constraining, highly evolvable populations may face extinction risk. However, in moderately constrained environments, evolvability provides significant advantages for long-term persistence and function.

The comparative analysis of evolutionary robustness and evolvability strategies reveals complementary strengths that can be integrated in advanced plant biosystems design. Robustness principles provide the foundation for stable system performance, while evolvability mechanisms offer flexibility in responding to unpredictable challenges.

Future research priorities should include developing mathematical models that quantitatively predict evolutionary dynamics across different plant systems, creating new tools for controlling mutational processes with temporal and spatial precision, and establishing standardized metrics for comparing evolutionary performance across diverse biological contexts [10]. Additionally, social responsibility requires careful consideration of how enhanced evolvability mechanisms might be controlled in environmental applications, with strategies for improving public understanding and acceptance of these technologies [10] [11].

By applying the comparative framework presented here, researchers can make informed decisions about design strategy selection based on their specific performance requirements, environmental contexts, and risk tolerance. This approach will accelerate the development of plant biosystems that effectively balance the competing demands of stability and adaptability—an essential advancement for meeting global challenges in food security, environmental sustainability, and climate resilience.

Shift from Trial-and-Error to Predictive Design in Plant Genetic Improvement

Plant genetic improvement is undergoing a fundamental transformation, moving from traditional trial-and-error approaches to sophisticated predictive design frameworks. This shift represents a critical evolution in how researchers engineer plant traits, leveraging computational models, synthetic biology, and advanced data analytics to accelerate genetic gain. Where traditional methods relied heavily on phenotypic selection through multiple breeding cycles, predictive design employs quantitative characterization of genetic parts, mathematical modeling of biological systems, and in silico prediction of variant effects to precisely engineer desired phenotypes [17] [1] [18]. This comparative analysis examines the performance of these contrasting approaches across multiple dimensions, providing researchers with empirical data to guide methodological selection.

The emerging field of plant biosystems design represents this paradigm shift, seeking to accelerate plant genetic improvement using genome editing and genetic circuit engineering based on predictive models of biological systems [1]. This approach stands in stark contrast to conventional breeding, which depends on extensive field testing and phenotypic evaluation over numerous generations. As computational power increases and biological characterization improves, predictive design methodologies are demonstrating significant advantages in efficiency, accuracy, and scalability for plant genetic improvement.

Comparative Performance Analysis: Quantitative Data

Table 1: Direct Performance Comparison Between Traditional and Predictive Approaches

| Performance Metric | Traditional Trial-and-Error | Predictive Design Framework | Experimental Context |
|---|---|---|---|
| Development Timeline | >2 months per design-test cycle [17] | ~10 days for part characterization [17] | Circuit design in Arabidopsis and Nicotiana benthamiana |
| Prediction Accuracy | Not quantitatively characterized | R² = 0.81 for circuit behavior [17] | 21 two-input genetic circuits with various logic functions |
| Genetic Gain Accuracy | Subject to environmental contamination effects [19] | 2-3 times higher prediction accuracy for yield traits [20] | Multi-environment genomic prediction in spring wheat |
| Experimental Variation | High batch-to-batch variability [17] | Significantly reduced via Relative Promoter Units [17] | Protoplast transfection system standardization |
| Heritability Estimation | Biased by outliers and non-normality [19] | Robust methods minimize outlier effects [19] | Commercial maize and rye breeding datasets |

Table 2: Multi-Environment Genomic Prediction Accuracy in Spring Wheat

| Trait | Single-Environment Model | Multi-Environment Model | Improvement |
|---|---|---|---|
| Grain Yield (GRYLD) | Low accuracy | 2-3x higher accuracy [20] | ~100-200% increase |
| Thousand-Grain Weight | Low accuracy | 2-3x higher accuracy [20] | ~100-200% increase |
| Days to Heading | Low accuracy | 2-3x higher accuracy [20] | ~100-200% increase |
| Days to Maturity | Low accuracy | 2-3x higher accuracy [20] | ~100-200% increase |

Experimental Protocols in Predictive Design

Quantitative Genetic Circuit Characterization Framework

The predictive design framework established for plant genetic circuits involves a standardized pipeline for quantitative characterization of genetic parts [17]. The protocol begins with establishment of a robust transient expression system using Arabidopsis leaf mesophyll protoplast transfection. Firefly luciferase serves as the primary reporter, with a normalization module featuring β-glucuronidase driven by a 200-bp 35S promoter to reduce variation. The critical innovation is the implementation of Relative Promoter Units (RPUs), which provide a relative measure of promoter strength compared to a reference promoter within each protoplast batch. This normalization strategy significantly reduces batch and experimental setup variation, enabling reproducible and comparative analyses.
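
One way to read the normalization described above is as a ratio of ratios: each promoter's luciferase signal is first divided by the co-transfected GUS signal, and the test promoter's value is then divided by that of the reference promoter measured in the same protoplast batch. The sketch below encodes that reading. The exact formula used in [17] is not given in the text, so this form, the function names, and the readings are assumptions.

```python
def normalized_activity(luc_signal, gus_signal):
    """Reporter output corrected for transfection efficiency (LUC / GUS)."""
    return luc_signal / gus_signal


def relative_promoter_units(test_luc, test_gus, ref_luc, ref_gus):
    """Promoter strength relative to the reference promoter from the same batch."""
    return normalized_activity(test_luc, test_gus) / normalized_activity(ref_luc, ref_gus)


# Hypothetical readings from two protoplast batches with different absolute signal levels
batch_a = relative_promoter_units(test_luc=2000.0, test_gus=100.0, ref_luc=4000.0, ref_gus=100.0)
batch_b = relative_promoter_units(test_luc=6000.0, test_gus=150.0, ref_luc=12000.0, ref_gus=150.0)
print(batch_a, batch_b)
```

Although the raw luciferase values differ threefold between the two hypothetical batches, the relative measure is the same, which is the property that makes results comparable across experiments.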

For modular synthetic promoter design, researchers employ the strong constitutive 200-bp 35S promoter as backbone, replacing DNA sequences with operators of TetR family repressors at specific sites while maintaining overall promoter length [17]. Evaluation of repression ability involves co-expressing both promoter and repressor on the same plasmid, with nuclear localization signal added at the C-terminal of the repressor. The input-output characteristics of sensors and NOT gates are quantified using Hill equations to parameterize response functions, enabling predictive circuit design.
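
Hill-type response functions of the kind mentioned above can be composed to predict circuit output. The sketch uses a common parameterization (minimum output, maximum output, half-maximal input `k`, and Hill coefficient `n`). The specific functional form used in [17] may differ, and every parameter value below is hypothetical.

```python
def hill_activation(x, y_min, y_max, k, n):
    """Sensor response: output rises from y_min toward y_max as input x increases."""
    return y_min + (y_max - y_min) * x**n / (k**n + x**n)


def hill_repression(x, y_min, y_max, k, n):
    """NOT-gate response: output falls from y_max toward y_min as input x increases."""
    return y_min + (y_max - y_min) * k**n / (k**n + x**n)


# Hypothetical parameters (outputs in RPU); not taken from the cited study
SENSOR = dict(y_min=0.05, y_max=2.0, k=1.0, n=1.5)
NOT_GATE = dict(y_min=0.02, y_max=1.0, k=0.5, n=2.0)


def circuit_output(inducer_concentration):
    """Predicted output of a sensor feeding a NOT gate, in the same relative units."""
    sensor_out = hill_activation(inducer_concentration, **SENSOR)
    return hill_repression(sensor_out, **NOT_GATE)


for inducer in (0.0, 0.1, 1.0, 10.0, 100.0):
    print(inducer, round(circuit_output(inducer), 3))
```

Because every part is expressed in the same relative units, the output of one response function can be passed directly as the input of the next, which is what makes predictive composition of multi-gate circuits possible.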

Multi-Environment Genomic Prediction Methodology

For genomic selection in breeding programs, the multi-environment predictive protocol involves several standardized steps [20]. Plant material consisting of advanced breeding lines is evaluated in randomized block designs across multiple locations and years, with each location-year combination treated as a distinct environment. Phenotypic evaluation generates best linear unbiased predictions for traits of interest using mixed models that account for environment, replication, genotype, and genotype-by-environment interaction effects.

Genotyping employs genotyping-by-sequencing with Illumina platforms, followed by SNP calling using TASSEL-GBS pipeline and imputation with Beagle. After quality control filtering, polymorphic SNPs are used for genomic prediction modeling. The multi-environment model incorporates both genetic and environmental variance components, with predictive accuracy assessed through cross-validation schemes that mimic actual breeding scenarios: predicting untested lines in tested environments and predicting tested lines in untested environments.
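
The two cross-validation scenarios described above differ only in what is held out. The sketch below builds both splits from a list of phenotype records and scores predictions with a Pearson correlation, a common accuracy measure in genomic prediction. The record layout, function names, and data are assumptions for illustration, and no prediction model is fitted here.

```python
import math
import random


def split_untested_lines(records, test_fraction=0.2, seed=0):
    """Hold out whole lines in every environment (untested lines, tested environments)."""
    lines = sorted({r["line"] for r in records})
    random.Random(seed).shuffle(lines)
    held_out = set(lines[:max(1, round(len(lines) * test_fraction))])
    train = [r for r in records if r["line"] not in held_out]
    test = [r for r in records if r["line"] in held_out]
    return train, test


def split_untested_environment(records, environment):
    """Hold out one whole environment (tested lines, untested environment)."""
    train = [r for r in records if r["env"] != environment]
    test = [r for r in records if r["env"] == environment]
    return train, test


def pearson(x, y):
    """Pearson correlation between observed and predicted values."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical records: 5 breeding lines evaluated in 2 location-year environments
records = [
    {"line": f"L{i}", "env": env, "yield": 4.0 + 0.3 * i + (0.5 if env == "E2" else 0.0)}
    for i in range(5) for env in ("E1", "E2")
]
train_lines, test_lines = split_untested_lines(records)
train_env, test_env = split_untested_environment(records, "E2")
print(len(train_lines), len(test_lines), len(train_env), len(test_env))
```

Keeping all records of a held-out line or environment together prevents information from leaking between training and test sets, which would otherwise inflate the apparent accuracy.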

Research Reagent Solutions Toolkit

Table 3: Essential Research Reagents for Predictive Plant Biosystems Design

| Reagent/Category | Function/Application | Specific Examples |
|---|---|---|
| Orthogonal Sensors | Chemical sensing and input response | Auxin sensor (GH3.3 promoter), Cytokinin sensor (TCSn) [17] |
| Modular Synthetic Promoters | Repressible genetic parts for circuit design | 35S backbone with TetR family operators (PhlF, IcaR, BM3R1) [17] |
| Reporter Systems | Quantitative characterization of parts | Firefly luciferase (LUC), β-glucuronidase (GUS) [17] |
| Genome Editing Tools | Precise genetic modifications | CRISPR-Cas systems for targeted genome editing [18] |
| Genotyping Platforms | Genome-wide marker data for prediction | GBS SNPs, Illumina HiSeq platforms [20] |
| Bioinformatic Software | Data analysis and model implementation | TASSEL v5.2.43, META-R, OD-V2 for design [21] [20] |

Workflow and System Diagrams

Diagrams (not reproduced here): Predictive vs Traditional Plant Breeding Workflow; Predictive Genetic Design Experimental Flow.

Discussion: Performance Advantages and Limitations

The comparative data demonstrate clear advantages of predictive design approaches across multiple performance metrics. The roughly 6-fold reduction in characterization time (from >2 months per design-test cycle to ~10 days for part characterization) represents a substantial acceleration of the design-build-test-learn cycle [17]. This efficiency gain is complemented by improved prediction accuracy, with multi-environment genomic selection models providing 2-3 times higher accuracy for complex traits like grain yield compared to single-environment models [20].

The quantitative framework for genetic circuit design in plants achieves remarkable prediction accuracy (R² = 0.81) for circuit behavior, enabling programmable multi-state phenotype control [17]. This precision stems from rigorous standardization using Relative Promoter Units, which effectively reduces experimental variation that has traditionally plagued plant synthetic biology. Furthermore, robust statistical methods for heritability estimation and genomic prediction minimize the deleterious effects of outliers that commonly compromise traditional breeding analyses [19].

However, predictive design approaches face limitations in model generalizability across diverse genetic backgrounds and environmental conditions. While sequence-based AI models show promise for variant effect prediction, their practical value in plant breeding requires rigorous validation [18]. Additionally, the development of comprehensive genome-scale models for plant systems remains challenging due to incomplete knowledge of gene functions, underground metabolism from enzyme promiscuity, and insufficient data on metabolite concentrations across cellular compartments [1].

The evidence clearly demonstrates that predictive design methodologies outperform traditional trial-and-error approaches across critical performance metrics including efficiency, accuracy, and scalability. However, the most effective plant genetic improvement strategies will likely integrate elements from both paradigms—leveraging the predictive power of computational models and synthetic circuits while maintaining the empirical validation of field-based testing. As computational capabilities advance and biological characterization improves, the shift toward predictive design will accelerate, potentially enabling de novo synthesis of plant genomes and revolutionary approaches to crop improvement.

Future developments in AI-driven predictive models [22] [18], multi-omics integration [22], and enhanced experimental design using genetic relatedness [21] will further strengthen the predictive design paradigm. Plant biosystems design represents not merely an incremental improvement but a fundamental transformation in how we understand, engineer, and improve plants to meet global challenges in food security and sustainable agriculture.

The fields of systems biology, synthetic biology, and data science are undergoing a transformative convergence, creating a new paradigm for plant biosystems design. This interdisciplinary approach represents a fundamental shift from traditional trial-and-error methods toward predictive, model-driven engineering of plant systems [1]. Where synthetic biology applies engineering principles to redesign biological systems, and systems biology seeks to understand them holistically, data science provides the computational framework to bridge understanding and implementation [23] [24]. This integration enables researchers to move beyond simple genetic modifications toward comprehensive pathway engineering and genome redesign, with profound implications for developing climate-resilient crops, sustainable bioproduction, and pharmaceutical manufacturing [1] [25]. The convergence is accelerating through key enabling technologies including high-throughput DNA synthesis, genome editing, and artificial intelligence/machine learning (AI/ML) applications, collectively forming a new engineering discipline for biological systems [23].

Comparative Performance of Integrated Approaches in Plant Biosystems Design

Analytical Framework and Performance Metrics

The comparative performance of different plant biosystems design approaches can be evaluated through multiple quantitative metrics that reflect their efficiency, precision, and predictive capability. The table below summarizes key performance indicators across the interdisciplinary spectrum.

Table 1: Performance Metrics for Plant Biosystems Design Approaches

| Approach | Engineering Precision | Pathway Complexity | Predictive Accuracy | Development Cycle Time | Scalability |
|---|---|---|---|---|---|
| Conventional Genetic Engineering | Low to Moderate (single gene focus) | Limited (1-3 genes) | Low (empirical testing required) | Months to years | Moderate |
| Synthetic Biology Toolkit | High (standardized parts) | Moderate (5-10 genes) | Moderate (characterized parts) | Weeks to months | High |
| Systems Biology Modeling | Theoretical (insight generation) | High (network-level) | Variable (model-dependent) | N/A (foundational) | Limited |
| Integrated Data Science Approach | High (model-informed) | Very High (10+ genes) | High (ML-improved) | Days to weeks | Very High |

Quantitative Comparison of Engineering Outcomes

Direct comparison of experimental outcomes demonstrates the performance advantages of integrated approaches. The following table synthesizes quantitative results from published studies applying these methodologies to specific plant engineering challenges.

Table 2: Experimental Performance Comparison Across Plant Engineering Studies

| Engineering Target | Approach | Gene Parts | Product Yield | Time to Result | Key Enabling Technologies |
|---|---|---|---|---|---|
| Etoposide Precursor | Synthetic Biology + Transient Expression | 8 genes | Milligram scale (two orders of magnitude increase) | Weeks | Golden Gate assembly, N. benthamiana transient expression [25] |
| Vinblastine Biosynthesis | Systems Biology + Pathway Discovery | 31 enzymes identified | Not quantified | Years (pathway elucidation) | RNA-seq, co-expression analysis, heterologous expression [25] |
| Montbretin A (MbA) | Full Pathway Heterologous Expression | Multiple pathway genes | Measurable but low yield | Months | Transcriptomics, metabolomics, synthetic biology [25] |
| Plant Morphology | Synthetic Developmental Biology | Variable logic gates | Precision tissue patterning | Months to years | Cell type-specific promoters, logic gates [26] |
| Rice Phenotyping | Data Science + Imaging | N/A | High-throughput 3D phenotyping | Real-time analysis | Computer vision, deep learning [27] |

Experimental Protocols and Methodologies

The Design-Build-Test-Learn (DBTL) Cycle in Plant Synthetic Biology

The DBTL cycle represents a foundational experimental framework in integrated plant biosystems design. This iterative engineering approach provides a systematic methodology for optimizing genetic constructs and metabolic pathways [23].

Detailed Experimental Protocol:

Design Phase:

- Objective: Design genetic circuits or metabolic pathways using standardized biological parts.

- Methods: Utilize bioinformatics databases and metabolic design software (e.g., Cello CAD for logic gates) [23].

- Data Integration: Incorporate genomic, transcriptomic, proteomic, and metabolomic data to inform model construction [23].

- Output: Specification of DNA parts and assembly strategy.

Build Phase:

- Objective: Physically construct designed genetic systems.

- Methods: Employ standardized DNA assembly frameworks like Golden Gate technology (MoClo, GoldenBraid) with Phytobrick standard parts [26].

- Host Systems: Use Agrobacterium tumefaciens for dicot transformation, or specialized vectors (e.g., pMIN from the Joint Modular Cloning (JMC) toolkit) that work in both monocots and dicots [26].

- Output: Multigene constructs ready for plant transformation.

Test Phase:

- Objective: Characterize system performance in a biological context.

- Methods: Transient expression in Nicotiana benthamiana for rapid testing; stable transformation in target species [25].

- Analysis: Deploy advanced analytics including next-generation sequencing and mass spectrometry to quantify performance [23].

- Output: Quantitative data on transgene expression, metabolite production, and phenotypic effects.

Learn Phase:

- Objective: Refine models and designs based on experimental data.

- Methods: Apply machine learning and artificial intelligence to analyze performance data and identify optimization targets [23].

- Output: Improved designs for the next DBTL cycle.
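The four phases above can be sketched as a simple loop. The following is a minimal illustration only, not a method taken from the cited studies: the design variable (a single "promoter strength" between 0 and 1), the `toy_assay` response curve, and the step-halving rule are all hypothetical stand-ins for real construct assembly, plant assays, and ML-guided learning.

```python
def toy_assay(strength):
    """Stand-in for Build + Test: a hypothetical yield that peaks at strength 0.7."""
    return 1.0 - (strength - 0.7) ** 2


def run_dbtl(initial=0.2, step=0.2, cycles=8, assay=toy_assay):
    """Iterate Design -> Build/Test -> Learn on one numeric design variable."""
    best, best_yield = initial, assay(initial)
    history = [(best, best_yield)]
    for _ in range(cycles):
        # Design: propose variants around the current best, clipped to [0, 1].
        candidates = [min(1.0, max(0.0, best + delta)) for delta in (-step, 0.0, step)]
        # Build + Test: "measure" every candidate with the (toy) assay.
        results = [(assay(c), c) for c in candidates]
        # Learn: keep a clear improvement, otherwise search more finely next cycle.
        top_yield, top = max(results)
        if top_yield > best_yield + 1e-12:
            best, best_yield = top, top_yield
        else:
            step /= 2
        history.append((best, best_yield))
    return best, best_yield, history


best_strength, best_yield, history = run_dbtl()
print(f"best strength ~ {best_strength:.2f}, yield ~ {best_yield:.3f}")
```

In practice the Learn step would fit a statistical or machine-learning model to all accumulated measurements rather than keeping only the single best design; the loop structure is the point of the sketch.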

[Figure: workflow diagram of the DBTL cycle (Design, Build, Test, Learn)]

Genome-Scale Modeling for Metabolic Engineering

Genome-scale models (GEMs) represent a key systems biology approach for predicting plant cellular phenotypes and guiding metabolic engineering strategies.

Detailed Experimental Protocol:

Network Reconstruction:

- Objective: Construct a biochemical network from genomic and omics data.

- Methods: Curate reactions, metabolites, and genes from databases (e.g., AraGEM for Arabidopsis) [1].

- Compartmentalization: Account for subcellular localization of metabolic processes.

Constraint-Based Modeling:

- Objective: Predict metabolic fluxes under steady-state conditions.

- Methods: Apply Flux Balance Analysis (FBA) or Elementary Mode Analysis (EMA) with appropriate objective functions (e.g., biomass maximization) [1].

- Tools: Use computational frameworks like COBRA or RAVEN Toolbox.

Experimental Validation:

- Objective: Test model predictions through isotopic labeling.

- Methods: Conduct ¹³C-labeling experiments (e.g., with ¹³CO₂) to measure intracellular fluxes [1].

- Analysis: Use mass spectrometry to track isotopic enrichment and compute metabolic flux rates.

Model Refinement:

- Objective: Improve model accuracy through iterative testing.

- Methods: Incorporate transcriptomic, proteomic, and metabolomic data to constrain model predictions [1].

- Output: Refined GEM with improved predictive capability for plant biosystems design.
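The steady-state assumption behind constraint-based modeling can be made concrete with a small check. The sketch below is illustrative only: the four-reaction network, its bounds, and the function names are hypothetical and are not drawn from AraGEM or from the COBRA or RAVEN toolboxes. It verifies that a candidate flux vector leaves every internal metabolite balanced and respects the flux bounds, which are the feasibility conditions FBA optimizes over.

```python
# Hypothetical toy network: uptake -> A, A -> B, A -> byproduct, B -> biomass.
# Rows are internal metabolites (A, B); columns are reactions v0..v3.
S = [
    [1, -1, -1, 0],   # A: made by uptake (v0), consumed by v1 and v2
    [0, 1, 0, -1],    # B: made by v1, consumed by the biomass reaction (v3)
]
BOUNDS = [(0, 10), (0, 100), (0, 100), (0, 100)]  # (lower, upper) per reaction


def net_production(stoich, fluxes):
    """Return S . v, the net production rate of each internal metabolite."""
    return [sum(coef * flux for coef, flux in zip(row, fluxes)) for row in stoich]


def is_feasible(stoich, fluxes, bounds, tol=1e-9):
    """True if fluxes satisfy steady state (S . v = 0) and all flux bounds."""
    balanced = all(abs(rate) <= tol for rate in net_production(stoich, fluxes))
    within = all(lo - tol <= v <= hi + tol for v, (lo, hi) in zip(fluxes, bounds))
    return balanced and within


balanced_fluxes = [10, 8, 2, 8]      # everything produced is consumed
unbalanced_fluxes = [10, 8, 1, 8]    # metabolite A accumulates at rate 1

print(is_feasible(S, balanced_fluxes, BOUNDS))
print(net_production(S, unbalanced_fluxes))
```

A full FBA would additionally search the feasible set for the flux vector that maximizes an objective such as the biomass reaction, typically with a linear programming solver.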

Signaling Pathways and Network Analysis in Plant Systems

Graph Theory Applications in Plant Gene-Metabolite Networks

Plant biosystems can be represented as complex networks where molecular components interact across multiple spatial and temporal dimensions. Graph theory provides a mathematical framework for analyzing these networks and identifying key regulatory motifs [1].

Network Components and Relationships:

- Nodes: Represent biological entities (genes, RNAs, proteins, metabolites)

- Edges: Represent interactions (protein-protein, protein-DNA, protein-metabolite)

- Network Motifs: Recurring regulatory patterns, including:

  - Feed-forward loops: Control timing and pulse generation in responses

  - Feedback loops: Provide stability or enable bistable switches

- Spatial Dimension: Networks operate across cellular, tissue, and organ levels

- Temporal Dimension: Dynamics vary across timescales (circadian, developmental, seasonal)
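As a minimal sketch of how such motifs can be located computationally, the code below represents a network as a directed graph and searches it for feed-forward loops (X regulates Y, Y regulates Z, and X also regulates Z directly) and for nodes that lie on a feedback loop. The node names and edges are invented for illustration, and the edge list ignores the spatial and temporal dimensions and the sign of each interaction.

```python
def build_adjacency(edges):
    """Map each node to the set of nodes it directly regulates."""
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, set()).add(target)
        adjacency.setdefault(target, set())
    return adjacency


def feed_forward_loops(adjacency):
    """Return sorted (X, Y, Z) triples with edges X->Y, Y->Z and X->Z."""
    loops = []
    for x, x_targets in adjacency.items():
        for y in x_targets:
            if y == x:
                continue  # ignore self-regulation
            for z in adjacency[y]:
                if z not in (x, y) and z in x_targets:
                    loops.append((x, y, z))
    return sorted(loops)


def in_feedback_loop(adjacency, node):
    """True if `node` can reach itself by following directed edges."""
    stack, seen = list(adjacency[node]), set()
    while stack:
        current = stack.pop()
        if current == node:
            return True
        if current not in seen:
            seen.add(current)
            stack.extend(adjacency[current])
    return False


# Hypothetical gene-metabolite network used only to exercise the functions.
edges = [
    ("TF1", "TF2"), ("TF2", "GeneZ"), ("TF1", "GeneZ"),   # feed-forward loop
    ("GeneZ", "MetaboliteM"), ("MetaboliteM", "TF1"),      # feedback to TF1
    ("GeneZ", "GeneW"),                                    # downstream output
]
network = build_adjacency(edges)
print(feed_forward_loops(network))
print(in_feedback_loop(network, "TF1"), in_feedback_loop(network, "GeneW"))
```

Dedicated tools such as Cytoscape (listed later in this section) offer far richer motif analysis; this sketch only shows the underlying graph logic.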

[Figure: plant gene-metabolite network with key regulatory motifs]

Metabolic Network Modeling and Analysis

Mechanistic modeling of cellular metabolism enables prediction of plant phenotypes from genetic and environmental perturbations. This approach applies mass conservation principles to quantify metabolic fluxes [1].

Pathway Modeling Components:

- Reaction Networks: Convert biochemical pathways into mathematical representations

- Ordinary Differential Equations: Describe metabolite concentration changes over time

- Flux Balance Analysis: Predict steady-state metabolic fluxes using optimization

- Elementary Mode Analysis: Identify all possible metabolic pathway routes

The modeling workflow begins with genome sequence data, incorporates omics datasets to construct metabolic networks, applies constraint-based analysis techniques, and ultimately predicts cellular phenotypes that can inform plant biosystems design strategies.
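The relationship between the components listed above can be written compactly. The notation here is the conventional one for constraint-based modeling and is an assumption of this sketch rather than notation from the cited sources: $\mathbf{x}$ is the vector of metabolite concentrations, $S$ the stoichiometric matrix, $\mathbf{v}$ the vector of reaction fluxes, $\mathbf{c}$ the objective weights (for example, selecting the biomass reaction), and $\mathbf{v}^{\min}$, $\mathbf{v}^{\max}$ the flux bounds.

```latex
% Mass conservation: concentrations change according to the net flux
\frac{d\mathbf{x}}{dt} = S\,\mathbf{v}(\mathbf{x})

% Steady-state assumption used by constraint-based methods
S\,\mathbf{v} = \mathbf{0}

% Flux Balance Analysis as a linear program
\max_{\mathbf{v}} \; \mathbf{c}^{\top}\mathbf{v}
\quad \text{subject to} \quad
S\,\mathbf{v} = \mathbf{0}, \qquad
\mathbf{v}^{\min} \le \mathbf{v} \le \mathbf{v}^{\max}
```

The ordinary differential equation form requires kinetic rate laws for $\mathbf{v}(\mathbf{x})$, whereas the steady-state form needs only stoichiometry and bounds, which is why the latter scales to genome-size networks.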

The Scientist's Toolkit: Essential Research Reagents and Solutions

DNA Assembly Frameworks and Toolkits

Standardized genetic part assembly systems form the foundation of plant synthetic biology, enabling rapid construction of complex genetic circuits.

Table 3: DNA Assembly Toolkits for Plant Synthetic Biology

| Toolkit Name | Assembly Strategy | Cloning Capacity | Key Features | Compatible Hosts |
|---|---|---|---|---|
| MoClo Assembly | Linear hierarchy (Levels 0-1-2) | 7 transcriptional units (expandable) | Widely adopted, extensive part collections | Dicots via A. tumefaciens [26] |
| Joint Modular Cloning (JMC) | Linear hierarchy | 7 TUs (expandable) | Uses PaqCI enzyme, works in monocots and dicots | N. benthamiana, S. viridis [26] |
| GoldenBraid | Cyclical (unlimited) | Unlimited through level cycling | Synthetic promoter tools, standardized parts | Dicot systems [26] |
| Loop Assembly | Cyclical (unlimited) | Unlimited through level cycling | Open MTA license, Marchantia parts | Dicot systems [26] |
| MAPS | Cyclical (unlimited) | Unlimited through level cycling | Methylation-compatible, AarI enzyme | Dicot systems [26] |
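Golden Gate-style toolkits such as those in Table 3 rely on Type IIS restriction enzymes that leave short, user-defined overhangs, so parts ligate in a fixed order only when each part's downstream overhang matches the next part's upstream overhang. The sketch below checks that property for one transcriptional unit. It is a simplified illustration: the `Part` type, the function name, and the specific four-nucleotide overhangs are assumptions chosen to resemble common plant part syntax, and should be replaced with the fusion sites defined by the toolkit actually in use.

```python
from typing import List, NamedTuple


class Part(NamedTuple):
    name: str
    left: str    # overhang at the 5' end of the part
    right: str   # overhang at the 3' end of the part


def check_assembly(parts: List[Part], vector_left: str, vector_right: str) -> List[str]:
    """Return a list of problems; an empty list means the parts chain in order."""
    if not parts:
        return ["no parts supplied"]
    problems = []
    if parts[0].left != vector_left:
        problems.append(f"{parts[0].name} does not match the vector's 5' site")
    for upstream, downstream in zip(parts, parts[1:]):
        if upstream.right != downstream.left:
            problems.append(f"{upstream.name} cannot ligate to {downstream.name}")
    if parts[-1].right != vector_right:
        problems.append(f"{parts[-1].name} does not match the vector's 3' site")
    # Every junction must be unique, or parts could ligate out of order.
    junctions = [vector_left] + [part.right for part in parts]
    for site in sorted(set(junctions)):
        if junctions.count(site) > 1:
            problems.append(f"overhang {site} is used at more than one junction")
    return problems


# Illustrative overhangs (assumed values, not taken from the cited toolkits).
promoter = Part("promoter", "GGAG", "AATG")
coding_sequence = Part("CDS", "AATG", "GCTT")
terminator = Part("terminator", "GCTT", "CGCT")

print(check_assembly([promoter, coding_sequence, terminator], "GGAG", "CGCT"))
print(check_assembly([coding_sequence, promoter, terminator], "GGAG", "CGCT"))
```

The check covers only overhang compatibility; real designs also need the parts to be free of internal recognition sites for the chosen enzyme.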

The integration of data science requires specialized computational tools for biological data analysis and model construction.

Table 4: Essential Computational Tools for Integrated Plant Biosystems Design

| Tool Category | Specific Tools/Platforms | Function | Application Example |
|---|---|---|---|
| Genome Sequencing | Illumina, PacBio, Oxford Nanopore | Generate genomic data | De novo genome assembly, variant calling [24] |
| Pathway Discovery | Phylogenomics, co-expression analysis | Identify biosynthetic genes | Elucidate colchicine pathway from Gloriosa superba [25] |
| Metabolic Modeling | COBRA, RAVEN, FBA | Predict metabolic fluxes | AraGEM for Arabidopsis metabolism [1] |
| Machine Learning | TensorFlow, PyTorch, scikit-learn | Predictive model building | TIS prediction in Arabidopsis [27] |
| Network Analysis | Cytoscape, Graph theory algorithms | Visualize and analyze interactions | Gene-metabolite network construction [1] |

Host Engineering and Transformation Systems

Effective implementation of designed genetic systems requires specialized host platforms and delivery methods.

Table 5: Host Systems for Plant Synthetic Biology Applications

| Host Platform | Transformation Method | Advantages | Limitations | Ideal Applications |
|---|---|---|---|---|
| Nicotiana benthamiana | Agrobacterium-mediated transient | Rapid testing (days), high protein yield | Transient expression, non-food crop | Pathway prototyping, metabolite production [25] |
| Arabidopsis thaliana | Agrobacterium-mediated stable | Well-characterized genetics, rapid cycling | Small size, limited biomass | Basic research, proof-of-concept |
| Crop Species (Rice, Tomato) | Stable transformation | Direct application to agriculture | Lengthy process, genotype-dependent | Trait development, nutritional enhancement |
| Plant Cell Cultures | Bioreactor systems | Controlled environment, scalable | Dedifferentiation, metabolite variation | Pharmaceutical production |

The integration of systems biology, synthetic biology, and data science represents a paradigm shift in plant biosystems design, moving the field from descriptive analysis to predictive design and engineering. The performance comparisons above indicate that integrated approaches outperform traditional single-gene methods in engineering precision, pathway complexity, and development efficiency [1] [26]. The DBTL cycle, supported by standardized genetic toolkits and computational modeling, enables iterative optimization that was previously impractical [23].

Future advances will depend on overcoming key technical challenges, including improving our understanding of gene function and regulation, obtaining better compartmentalization data for metabolic modeling, and addressing the hidden "underground metabolism" that results from enzyme promiscuity [1]. Additionally, ethical considerations and public perception must be carefully addressed to ensure responsible deployment of these powerful technologies [1]. As biological and data sciences continue their convergence, the plant biosystems design community is poised to address critical challenges in food security, sustainable agriculture, and pharmaceutical production through increasingly sophisticated and predictive engineering of plant systems [28].

Technical Approaches and Implementation Strategies for Plant Engineering

Genome editing technologies have revolutionized biological research and therapeutic development by enabling precise modifications to an organism's DNA. These tools function as molecular scissors, creating targeted double-strand breaks (DSBs) in DNA that are subsequently repaired by the cell's natural repair mechanisms, leading to the desired genetic alterations [29] [30]. The global market for genome editing is expanding rapidly, projected to grow from $10.8 billion in 2025 to $23.7 billion by 2030, reflecting a compound annual growth rate of 16.9% [31]. This growth is driven by advances in genetic engineering technologies, growing demand for targeted therapeutics, and increasing integration of genomics into clinical and agricultural applications [31].

Genome editing has progressed from early techniques such as homologous recombination and RNA interference to more precise programmable nucleases, including zinc finger nucleases (ZFNs), transcription activator-like effector nucleases (TALENs), and most recently the CRISPR-Cas system [29] [32]. Each technology offers distinct advantages and limitations in precision, efficiency, cost, and ease of use, making it suitable for different research and application contexts. Among these, CRISPR-Cas systems have emerged as particularly transformative due to their simplicity, efficiency, and versatility [33] [29]. This section compares these technologies, focusing on their applications in plant biosystems design and their relative performance characteristics based on experimental data.

Comparative Analysis of Major Genome Editing Platforms